```

Example:

```bash
npx hardhat verify 0x3972c87769886C4f1Ff3a8b52bc57738E82192D5 MockNFT Mock ipfs://QmQ2RFEmZaMds8bRjZCTJxo4DusvcBdLTS6XuDbhp5BZjY 100 --network fuji
```

You can also verify contracts programmatically via script. Example:

```ts title="verify.ts"
import hre from "hardhat"

// Constructor arguments used when the NFT was deployed
const name = "MockNFT"
const symbol = "Mock"
const _metadataUri = "ipfs://QmQ2RFEmZaMds8bRjZCTJxo4DusvcBdLTS6XuDbhp5BZjY"
const _maxTokens = "100"

async function main() {
  // Same task as `npx hardhat verify`; arguments must be in constructor order
  await hre.run("verify:verify", {
    address: "0x3972c87769886C4f1Ff3a8b52bc57738E82192D5",
    constructorArguments: [name, symbol, _metadataUri, _maxTokens],
  })
}

main()
  .then(() => process.exit(0))
  .catch((error) => {
    console.error(error)
    process.exit(1)
  })
```

First save your script (here as `scripts/verify.ts`), then run it with Hardhat:

```bash
npx hardhat run scripts/verify.ts --network fuji
```

Verifying from the terminal does not let you pass an array as an argument. When verifying via script, however, you can include the array directly in your *Constructor Arguments*. Example:

```ts
import hre from "hardhat"

// Constructor arguments used when the NFT was deployed
const name = "MockNFT"
const symbol = "Mock"
const _metadataUri =
  "ipfs://QmQn2jepp3jZ3tVxoCisMMF8kSi8c5uPKYxd71xGWG38hV/Example"
const _royaltyRecipient = "0xcd3b766ccdd6ae721141f452c550ca635964ce71"
const _royaltyValue = "50000000000000000"
// Array arguments are passed as-is inside constructorArguments
const _custodians = [
  "0x8626f6940e2eb28930efb4cef49b2d1f2c9c1199",
  "0xf39fd6e51aad88f6f4ce6ab8827279cfffb92266",
  "0xdd2fd4581271e230360230f9337d5c0430bf44c0",
]
const _saleLength = "172800"
const _claimAddress = "0xcd3b766ccdd6ae721141f452c550ca635964ce71"

async function main() {
  await hre.run("verify:verify", {
    address: "0x08bf160B8e56899723f2E6F9780535241F145470",
    constructorArguments: [
      name,
      symbol,
      _metadataUri,
      _royaltyRecipient,
      _royaltyValue,
      _custodians,
      _saleLength,
      _claimAddress,
    ],
  })
}

main()
  .then(() => process.exit(0))
  .catch((error) => {
    console.error(error)
    process.exit(1)
  })
```

# Using Snowtrace

URL: /docs/dapps/verify-contract/snowtrace

Learn how to verify a contract on the Avalanche C-chain using Snowtrace.

The C-Chain Explorer supports verifying smart contracts, allowing users to review them. The Mainnet C-Chain Explorer is [here](https://snowtrace.io/) and the Fuji Testnet Explorer is [here](https://testnet.snowtrace.io/).

If you have issues, contact us on [Discord](https://chat.avalabs.org/).

## Steps

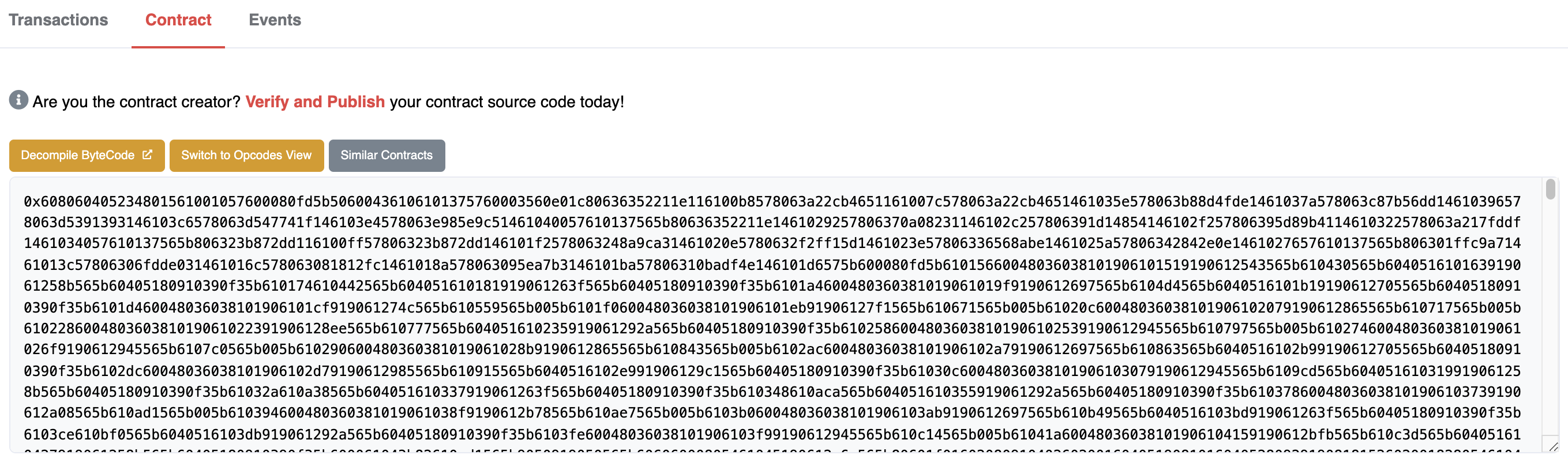

Navigate to the *Contract* tab at the Explorer page for your contract's address.

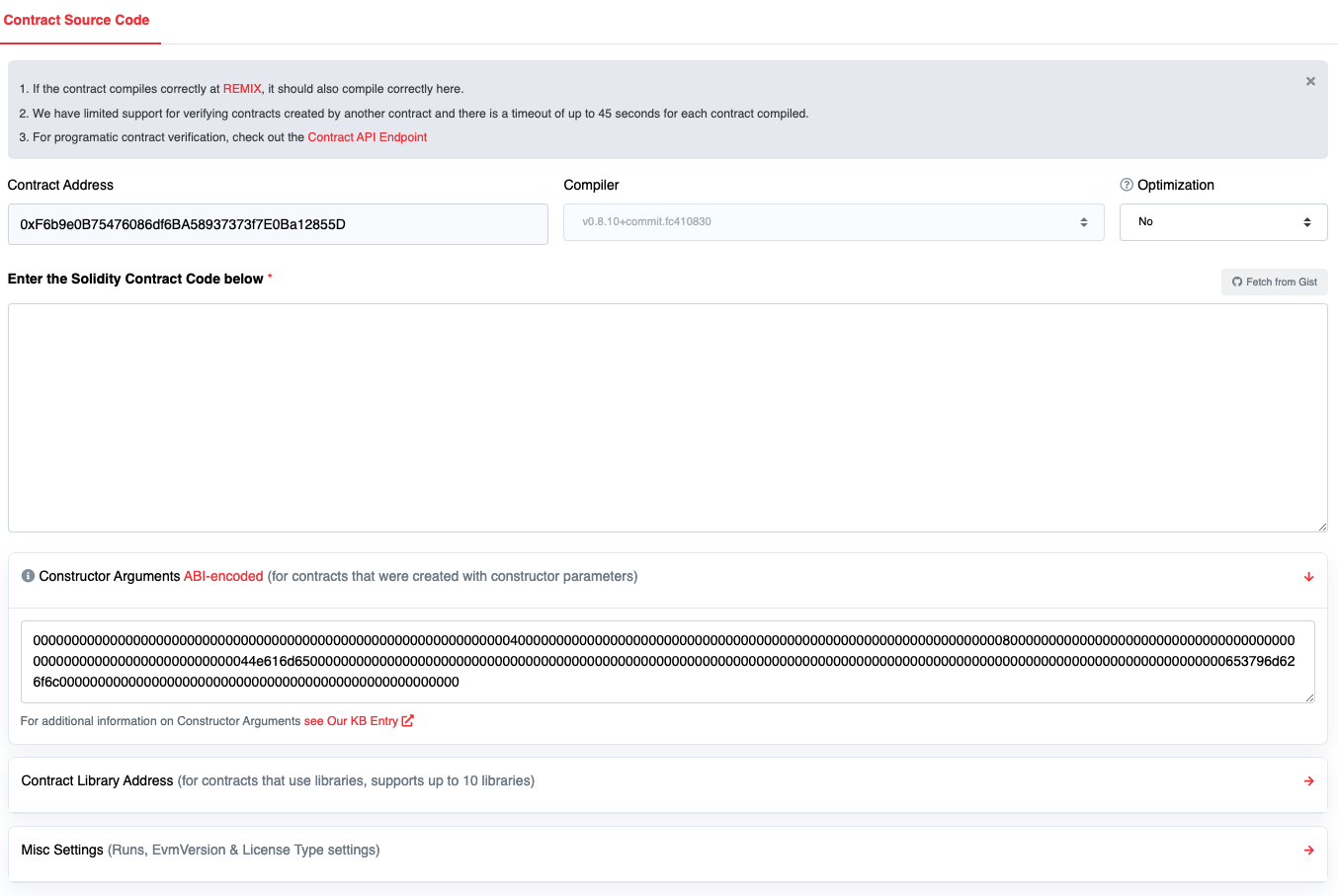

Click *Verify & Publish* to enter the smart contract verification page.

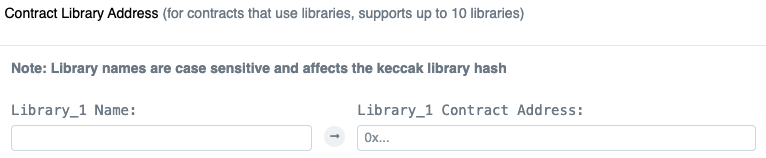

[Libraries](https://docs.soliditylang.org/en/v0.8.4/contracts.html?highlight=libraries#libraries) can be provided. If they are, they must be deployed, independently verified, and listed in the *Add Contract Libraries* section.

The C-Chain Explorer can fetch constructor arguments automatically for simple smart contracts. More complicated contracts might require you to supply the ABI-encoded constructor arguments yourself. Smart contracts with complicated constructors [may have validation issues](/docs/dapps/verify-contract/snowtrace#caveats). To encode the arguments, you can try this [online ABI encoder](https://abi.hashex.org/).

## Requirements

* **IMPORTANT** Contracts should be verified on Testnet before being deployed to Mainnet to ensure there are no issues.

* Contracts must be flattened. `import` statements will not work.

* Contracts should be compilable in [Remix](https://remix.ethereum.org/). A flattened contract with `pragma experimental ABIEncoderV2` (for example) can produce unusual binary and/or constructor blobs, which might cause validation issues.

* The C-Chain Explorer **only** validates contracts compiled with [solc JavaScript](https://github.com/ethereum/solc-bin) and only supports [Solidity](https://docs.soliditylang.org/) contracts.

## Libraries

The compiled bytecode indicates whether there are external libraries. If you deployed with Remix, you will also see multiple transactions created.

```
{
  "linkReferences": {
    "contracts/Storage.sol": {
      "MathUtils": [
        {
          "length": 20,
          "start": 3203
        }
        ...
      ]
    }
  },
  "object": "....",
  ...
}
```

This requires you to add external libraries in order to verify the code.
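A minimal sketch, assuming compiler output shaped like the `linkReferences` example above, of how to list the libraries you will need to add and of what linking actually does: each library's 20-byte address is written into the referenced byte ranges. The helper names are hypothetical, and the explorer performs its own linking; this only illustrates the structure.

```typescript
// Shape of `linkReferences` in solc/Remix output, as in the example above:
// source file -> library name -> byte ranges where the address is patched in
type LinkReferences = Record<string, Record<string, { start: number; length: number }[]>>

// Fully qualified names ("source:Library") of every library the bytecode needs
function requiredLibraries(refs: LinkReferences): string[] {
  const names: string[] = []
  for (const [file, libs] of Object.entries(refs)) {
    for (const lib of Object.keys(libs)) names.push(`${file}:${lib}`)
  }
  return names.sort()
}

// Writes each deployed library address into its referenced slots.
// `start` and `length` are byte offsets, so they are doubled to index hex characters.
function linkBytecode(
  bytecode: string,
  refs: LinkReferences,
  addresses: Record<string, string>,
): string {
  let code = bytecode.replace(/^0x/, "")
  for (const [file, libs] of Object.entries(refs)) {
    for (const [lib, ranges] of Object.entries(libs)) {
      const fqName = `${file}:${lib}`
      const addr = (addresses[fqName] ?? "").toLowerCase().replace(/^0x/, "")
      if (!/^[0-9a-f]{40}$/.test(addr)) throw new Error(`missing or invalid address for ${fqName}`)
      for (const { start, length } of ranges) {
        if (length !== 20) throw new Error(`unexpected reference length ${length}`)
        code = code.slice(0, start * 2) + addr + code.slice((start + length) * 2)
      }
    }
  }
  return "0x" + code
}
```

Every name returned by `requiredLibraries` corresponds to an entry you would expect to fill in under *Add Contract Libraries*.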

A library can have dependent libraries. To verify a library, the hierarchy of dependencies must be provided to the C-Chain Explorer. Verification may fail if you provide more than the library plus its dependencies (that is, you might need to prune the Solidity code to exclude anything but the necessary contracts).

You can also see references in the bytecode in the form `__$75f20d36....$__`. The placeholder is derived from the keccak256 hash of the fully qualified library name, such as `contracts/Storage.sol:MathUtils`.

Example [online converter](https://emn178.github.io/online-tools/keccak_256.html): `contracts/Storage.sol:MathUtils` => `75f20d361629befd780a5bd3159f017ee0f8283bdb6da80805f83e829337fd12`
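Given such a hash, the placeholder itself can be rebuilt. This sketch assumes the Solidity 0.5+ format (`__$`, the first 34 hex characters of the hash, then `$__`, 40 characters in total, matching the 20-byte `length` in `linkReferences`). It does not compute keccak256; the hash is taken from the converter example above, and the function names are hypothetical.

```typescript
// Solidity 0.5+ placeholder for an unlinked library:
// "__$" + first 34 hex chars of keccak256("source:Library") + "$__"
function libraryPlaceholder(keccakHex: string): string {
  const hex = keccakHex.toLowerCase().replace(/^0x/, "")
  if (!/^[0-9a-f]{64}$/.test(hex)) throw new Error("expected a 32-byte keccak256 hash in hex")
  return `__$${hex.slice(0, 34)}$__`
}

// Counts how often a library is referenced in unlinked bytecode
function countLibraryReferences(bytecode: string, keccakHex: string): number {
  return bytecode.split(libraryPlaceholder(keccakHex)).length - 1
}

// Hash of "contracts/Storage.sol:MathUtils" from the converter example
const mathUtilsHash = "75f20d361629befd780a5bd3159f017ee0f8283bdb6da80805f83e829337fd12"
const placeholder = libraryPlaceholder(mathUtilsHash)
// placeholder === "__$75f20d361629befd780a5bd3159f017ee0$__"
```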

## Examples

* [SwapFlashLoan](https://testnet.snowtrace.io/address/0x12DF75Fed4DEd309477C94cE491c67460727C0E8/contract/43113/code)

SwapFlashLoan uses `SwapUtils` and `MathUtils`:

* [SwapUtils](https://testnet.snowtrace.io/address/0x6703e4660E104Af1cD70095e2FeC337dcE034dc1/contract/43113/code)

SwapUtils requires `MathUtils`:

* [MathUtils](https://testnet.snowtrace.io/address/0xbA21C84E4e593CB1c6Fe6FCba340fa7795476966/contract/43113/code)
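Because libraries must be deployed and independently verified before the contracts that use them, the examples above imply an order: MathUtils, then SwapUtils, then SwapFlashLoan. A small sketch of deriving that order with a depth-first topological sort; the dependency map is hand-written from the examples and the function name is hypothetical.

```typescript
// Hypothetical dependency map built from the Examples above:
// each contract lists the libraries it links against.
const libraryDeps: Record<string, string[]> = {
  SwapFlashLoan: ["SwapUtils", "MathUtils"],
  SwapUtils: ["MathUtils"],
  MathUtils: [],
}

// Depth-first topological sort: each library comes before every contract that uses it
function verificationOrder(graph: Record<string, string[]>): string[] {
  const order: string[] = []
  const state = new Map<string, "visiting" | "done">()
  const visit = (name: string): void => {
    const s = state.get(name)
    if (s === "done") return
    if (s === "visiting") throw new Error(`dependency cycle at ${name}`)
    state.set(name, "visiting")
    for (const dep of graph[name] ?? []) visit(dep)
    state.set(name, "done")
    order.push(name)
  }
  for (const name of Object.keys(graph)) visit(name)
  return order
}

const verifyOrder = verificationOrder(libraryDeps) // ["MathUtils", "SwapUtils", "SwapFlashLoan"]
```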

## Caveats

### SPDX License Required

An SPDX license identifier must be provided.

```solidity
// SPDX-License-Identifier: ...
```

### `keccak256` Strings Processed

The C-Chain Explorer interprets all `keccak256(...)` strings, even those in comments. This can cause issues with constructor arguments.

```solidity
/// keccak256("1");
keccak256("2");
```

This could cause automatic constructor verification to fail. If you receive errors about constructor arguments, they can be provided in ABI hex encoded form on the contract verification page.
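Before submitting, it can help to locate such comments in the flattened file, for example to reword them. This is a hypothetical pre-flight check, not something the explorer provides: it reports the line numbers of `//`, `///` and `/* ... */` comments containing `keccak256(`, and it does not handle comment markers inside string literals.

```typescript
// Returns 1-based line numbers of comments in `source` that contain `keccak256(`.
// Limitation: comment markers inside string literals are not handled.
function keccakInComments(source: string): number[] {
  const hits: number[] = []
  const commentPattern = /\/\/[^\n]*|\/\*[\s\S]*?\*\//g
  let match: RegExpExecArray | null
  while ((match = commentPattern.exec(source)) !== null) {
    if (match[0].includes("keccak256(")) {
      // Line where the comment starts = number of newlines before it + 1
      hits.push(source.slice(0, match.index).split("\n").length)
    }
  }
  return hits
}

const flattened = '/// keccak256("1");\nkeccak256("2");\n'
const commentHits = keccakInComments(flattened) // [1]: only the commented call is reported
```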

### Solidity Constructors

Constructors and inherited constructors can cause problems verifying the constructor arguments. Example:

```solidity
abstract contract Parent {
    constructor () {
        address msgSender = ...;
        emit Something(address(0), msgSender);
    }
}

contract Main is Parent {
    constructor (
        string memory _name,
        address deposit,
        uint fee
    ) {
        ...
    }
}
```

If you receive errors about constructor arguments, they can be provided in ABI hex encoded form on the contract verification page.
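To show what "ABI hex encoded form" means for the `Main` constructor above, here is a minimal, dependency-free sketch that encodes its `(string, address, uint)` arguments (`uint` is `uint256`). The function names are hypothetical, only ASCII strings are handled, and an ABI library or the online encoder linked earlier is the safer choice in practice. Whether the verification form expects a `0x` prefix is an assumption to check; the sketch returns the hex without one.

```typescript
const WORD_HEX = 64 // one 32-byte ABI word = 64 hex characters

function padLeftWord(hex: string): string {
  if (hex.length > WORD_HEX) throw new Error("value does not fit in 32 bytes")
  return hex.padStart(WORD_HEX, "0")
}

function padRightToWords(hex: string): string {
  const rem = hex.length % WORD_HEX
  return rem === 0 ? hex : hex + "0".repeat(WORD_HEX - rem)
}

function encodeUint256(value: bigint): string {
  if (value < BigInt(0)) throw new Error("uint256 must be non-negative")
  return padLeftWord(value.toString(16))
}

function encodeAddress(addr: string): string {
  const hex = addr.toLowerCase().replace(/^0x/, "")
  if (!/^[0-9a-f]{40}$/.test(hex)) throw new Error(`invalid address: ${addr}`)
  return padLeftWord(hex)
}

function encodeAsciiString(s: string): string {
  let data = ""
  for (let i = 0; i < s.length; i++) {
    const code = s.charCodeAt(i)
    if (code > 0x7f) throw new Error("this sketch only handles ASCII strings")
    data += code.toString(16).padStart(2, "0")
  }
  // Dynamic type: a length word, then the bytes right-padded to a 32-byte boundary
  return encodeUint256(BigInt(s.length)) + padRightToWords(data)
}

// Encodes (string _name, address deposit, uint fee); returns hex without 0x
function encodeMainConstructorArgs(name: string, deposit: string, fee: bigint): string {
  // Head: one word per argument. The string's slot holds the byte offset of its
  // data, which starts after the three head words (3 * 32 = 96 bytes).
  const head = encodeUint256(BigInt(3 * 32)) + encodeAddress(deposit) + encodeUint256(fee)
  return head + encodeAsciiString(name)
}

const encodedArgs = encodeMainConstructorArgs(
  "MockNFT",
  "0xcd3b766ccdd6ae721141f452c550ca635964ce71",
  BigInt(100),
)
```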

# Fuji Testnet

URL: /docs/quick-start/networks/fuji-testnet

Learn about the official Testnet for the Avalanche ecosystem.

Fuji's infrastructure imitates Avalanche Mainnet. It consists of a [Primary Network](/docs/quick-start/primary-network) formed by instances of the X, P, and C-Chain, as well as many test Avalanche L1s.

## When to Use Fuji

Fuji provides users with a platform to simulate the conditions found in the Mainnet environment. It enables developers to deploy demo smart contracts so they can test and refine their applications before deploying them on the [Primary Network](/docs/quick-start/primary-network).

Users interested in experimenting with Avalanche can receive free testnet AVAX, allowing them to explore the platform without any risk. These testnet tokens have no real-world value and are meant only for experimentation within the Fuji test network.

To receive testnet tokens, users can request funds from the [Avalanche Faucet](/docs/dapps/smart-contract-dev/get-test-funds). If your C-Chain address already holds an AVAX balance greater than zero on Mainnet, paste it there and request test tokens. Otherwise, please request a faucet coupon on [Guild](https://guild.xyz/avalanche). Admins and mods on the official [Discord](https://discord.com/invite/RwXY7P6) can provide testnet AVAX if developers are unable to obtain it from the other two options.

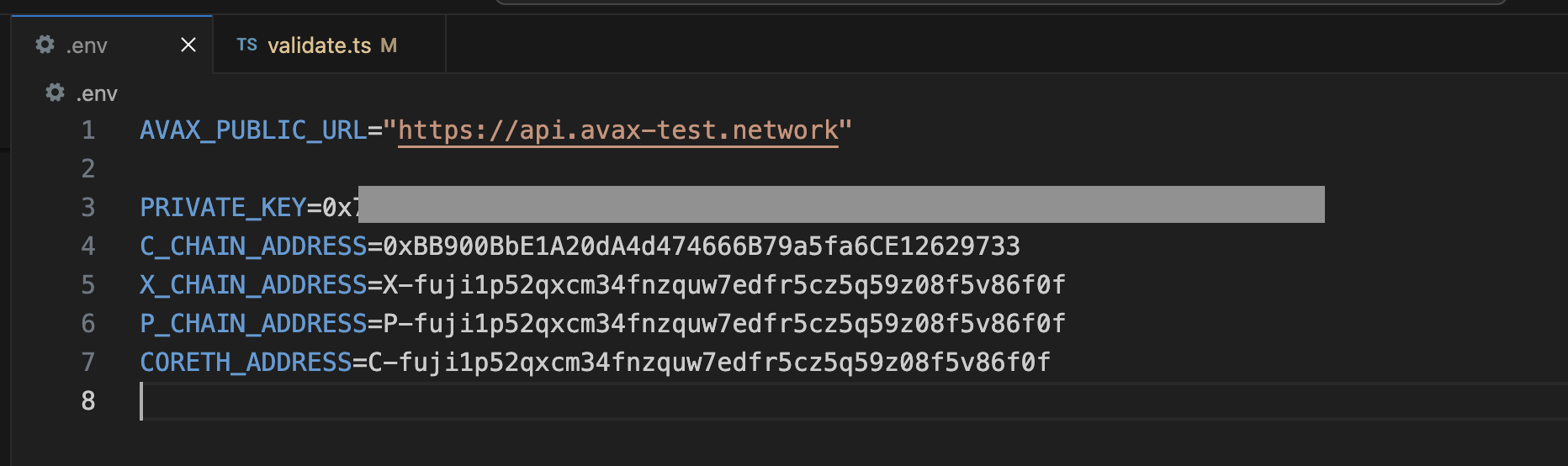

## Add Avalanche C-Chain Testnet to Wallet

* **Network Name**: Avalanche Fuji C-Chain

* **RPC URL**: [https://api.avax-test.network/ext/bc/C/rpc](https://api.avax-test.network/ext/bc/C/rpc)

* **WebSocket URL**: wss\://api.avax-test.network/ext/bc/C/ws

* **ChainID**: `43113`

* **Symbol**: `AVAX`

* **Explorer**: [https://subnets-test.avax.network/c-chain](https://subnets-test.avax.network/c-chain)

Head over to the explorer linked above and select "Add Avalanche C-Chain to Wallet" under "Chain Info" to add the network automatically.
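If you prefer to add the network from a dapp instead, wallets that implement EIP-3085 accept a `wallet_addEthereumChain` request. A sketch of the parameter object filled in with the Fuji values above; the chain ID must be hex (`43113` is `0xa869`), and `nativeCurrency.name` is an assumption (C-Chain AVAX uses 18 decimals). The provider object in the final comment is assumed, not shown.

```typescript
// Parameters of the EIP-3085 `wallet_addEthereumChain` request
interface AddEthereumChainParameter {
  chainId: string // hex string with 0x prefix
  chainName: string
  nativeCurrency: { name: string; symbol: string; decimals: number }
  rpcUrls: string[]
  blockExplorerUrls?: string[]
}

function toHexChainId(chainId: number): string {
  return "0x" + chainId.toString(16)
}

// Values from the list above; `nativeCurrency.name` is an assumption
const fujiCChain: AddEthereumChainParameter = {
  chainId: toHexChainId(43113), // "0xa869"
  chainName: "Avalanche Fuji C-Chain",
  nativeCurrency: { name: "AVAX", symbol: "AVAX", decimals: 18 },
  rpcUrls: ["https://api.avax-test.network/ext/bc/C/rpc"],
  blockExplorerUrls: ["https://subnets-test.avax.network/c-chain"],
}

// With an injected EIP-1193 provider (assumed, not shown), the request would be:
// await provider.request({ method: "wallet_addEthereumChain", params: [fujiCChain] })
```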

## Additional Details

* Fuji Testnet has its own dedicated [block explorer](https://subnets-test.avax.network/).

* The Public API endpoint for Fuji is not the same as Mainnet. More info is available in the [Public API Server](/docs/tooling/rpc-providers) documentation.

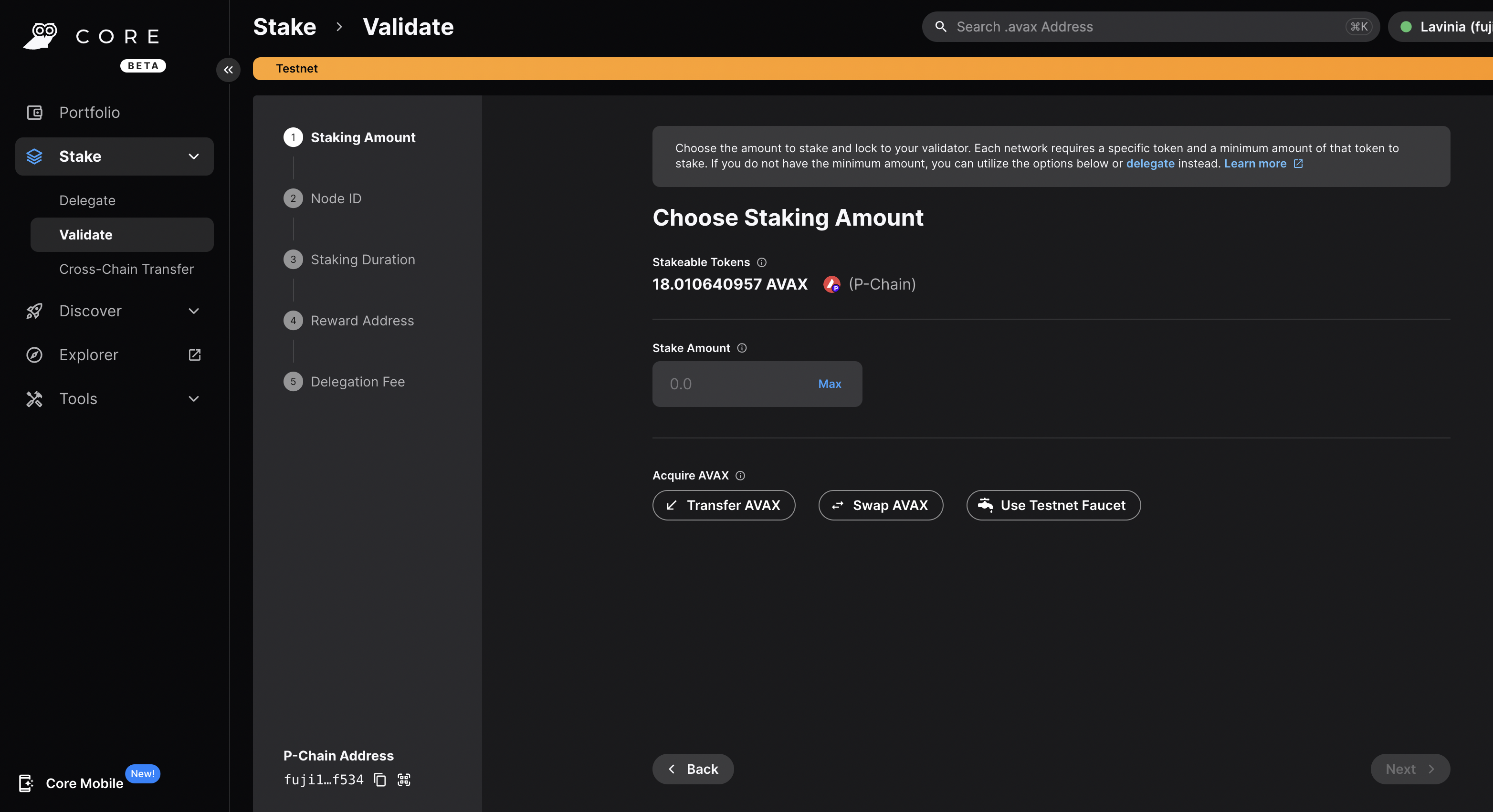

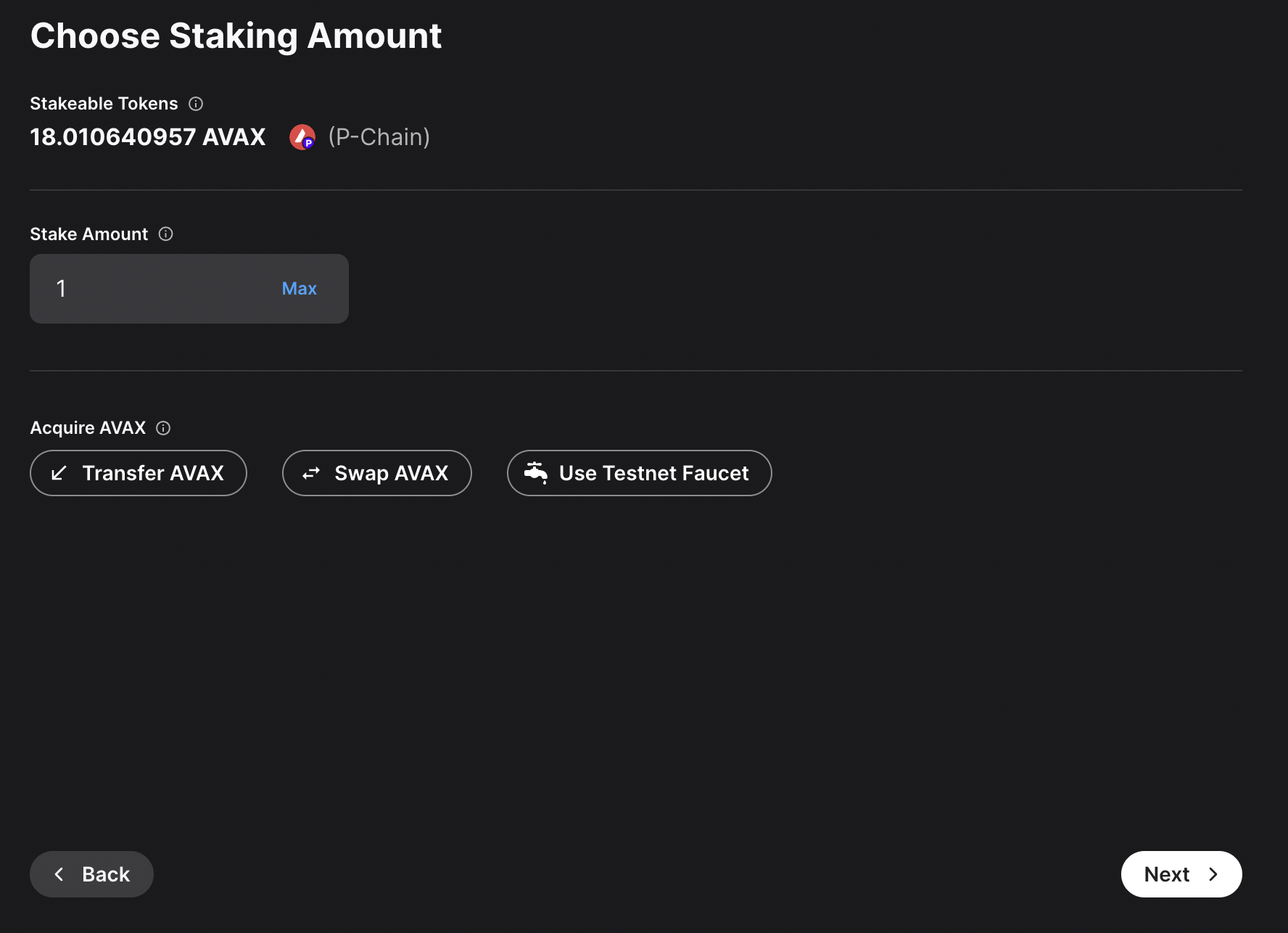

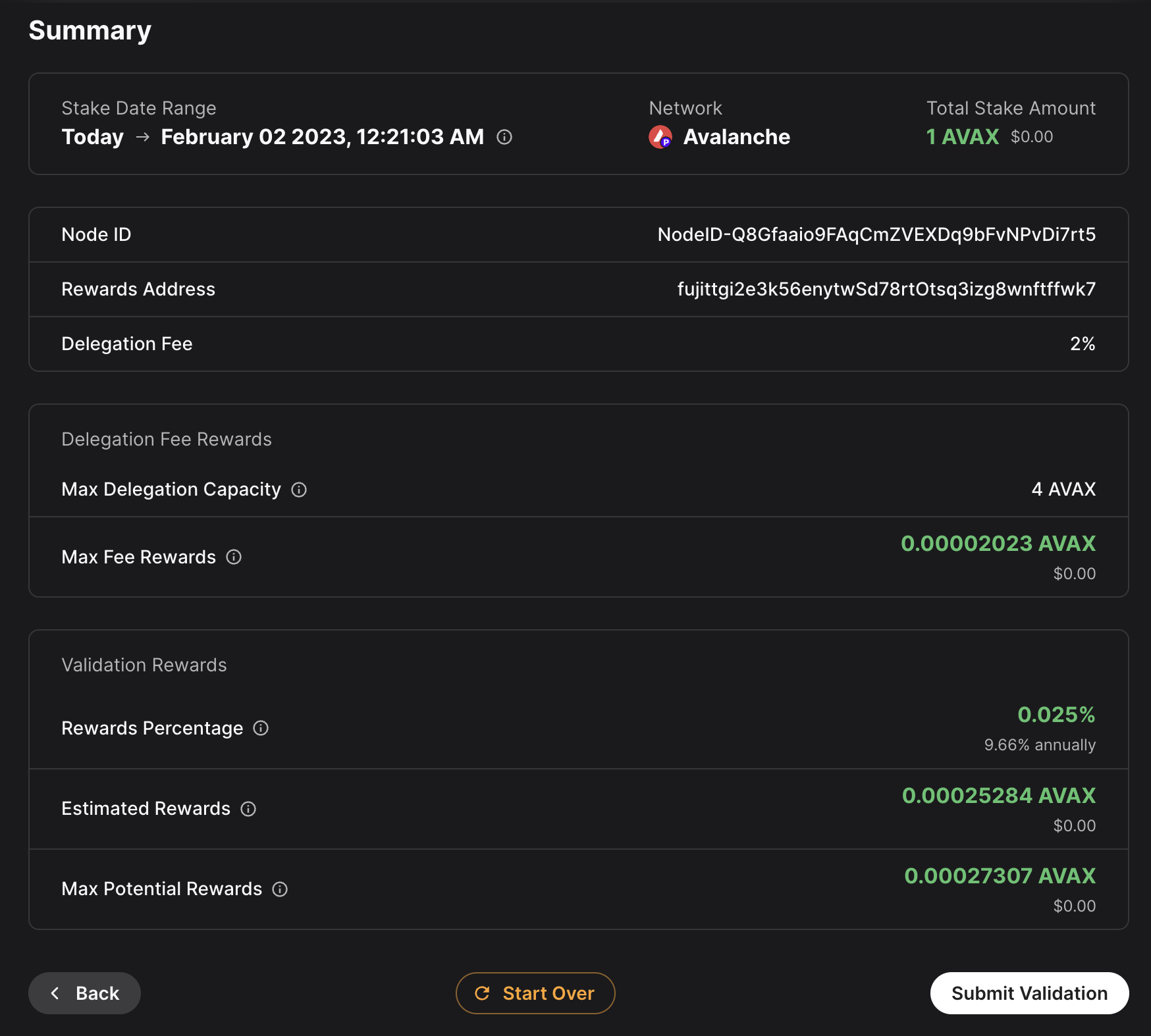

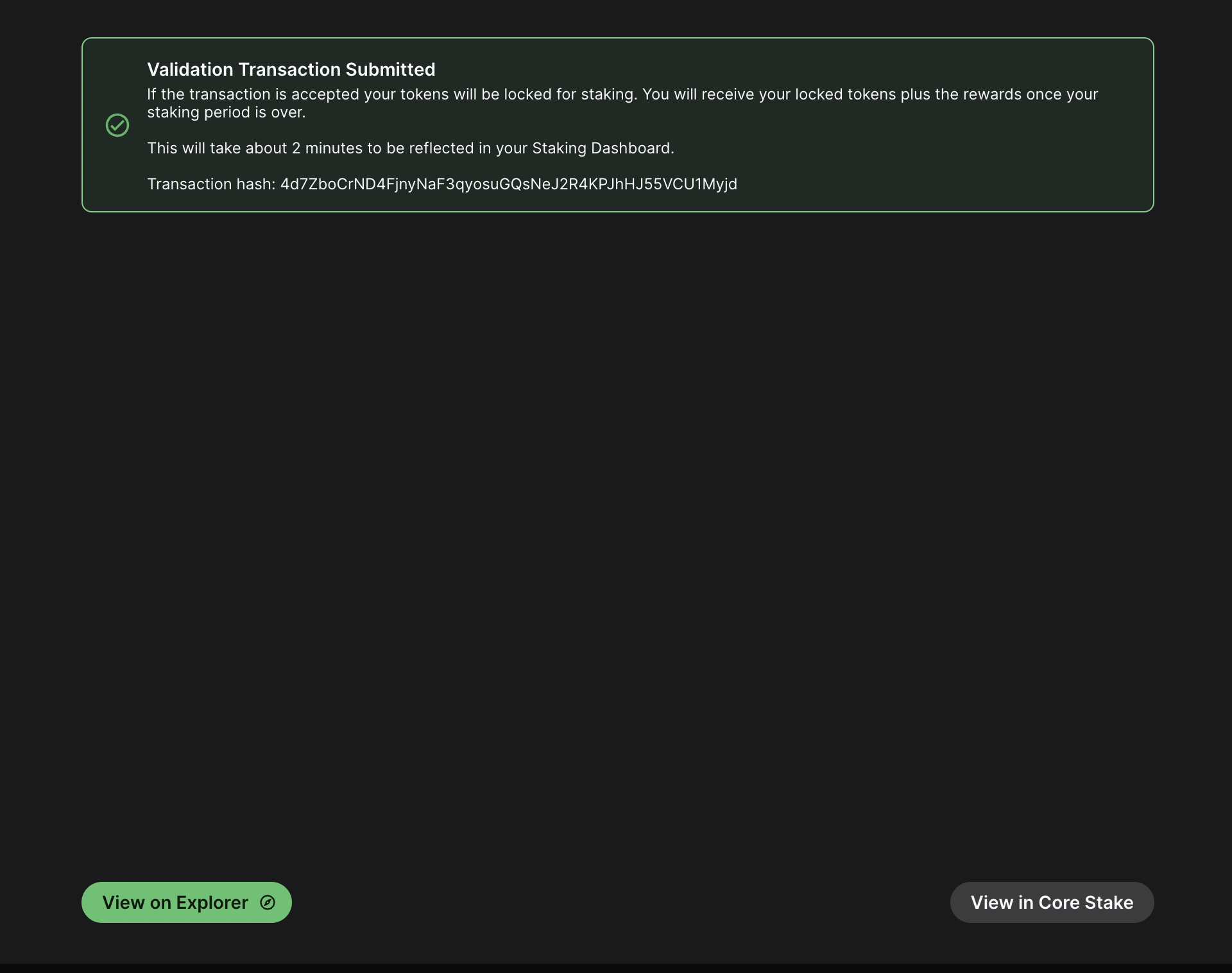

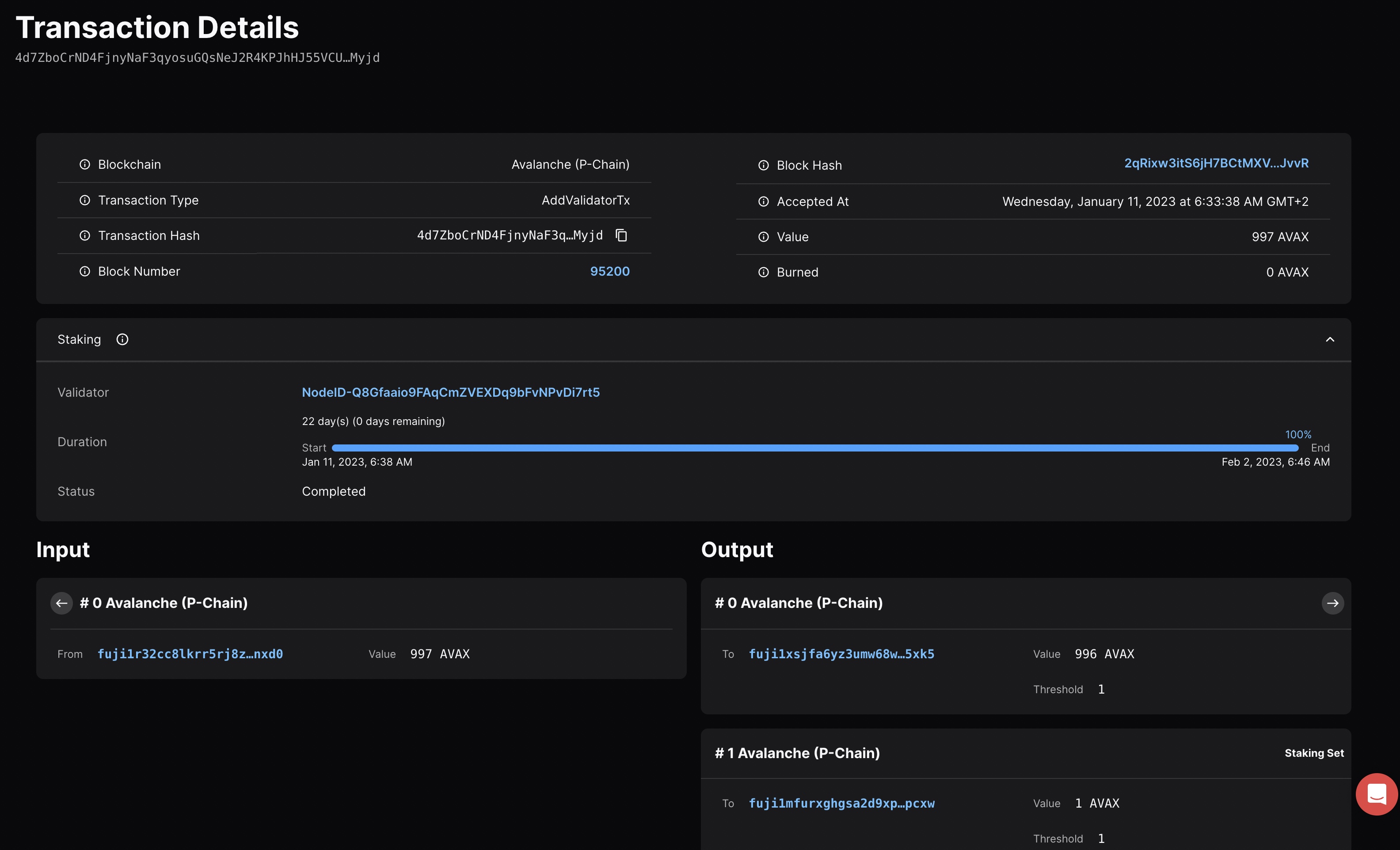

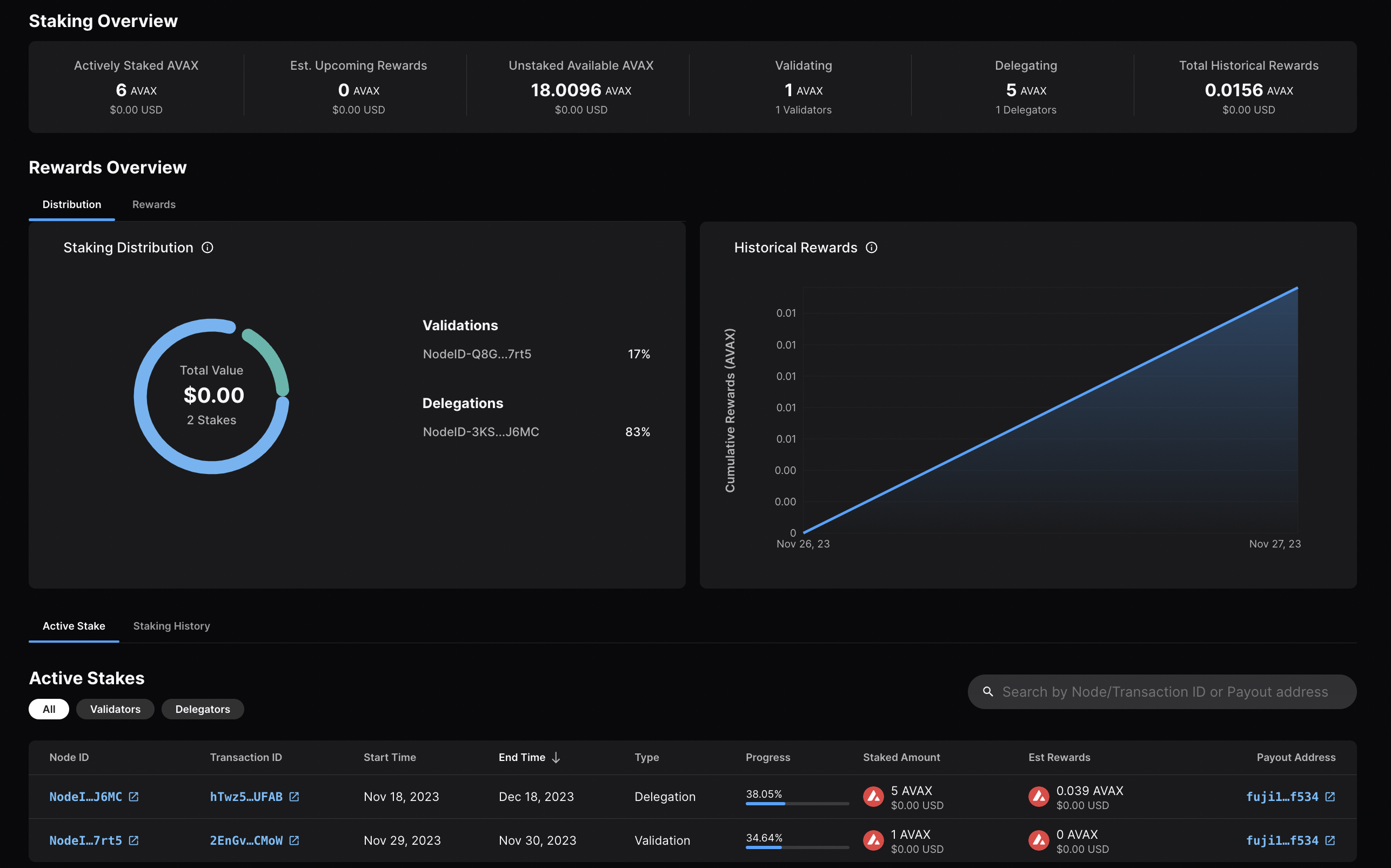

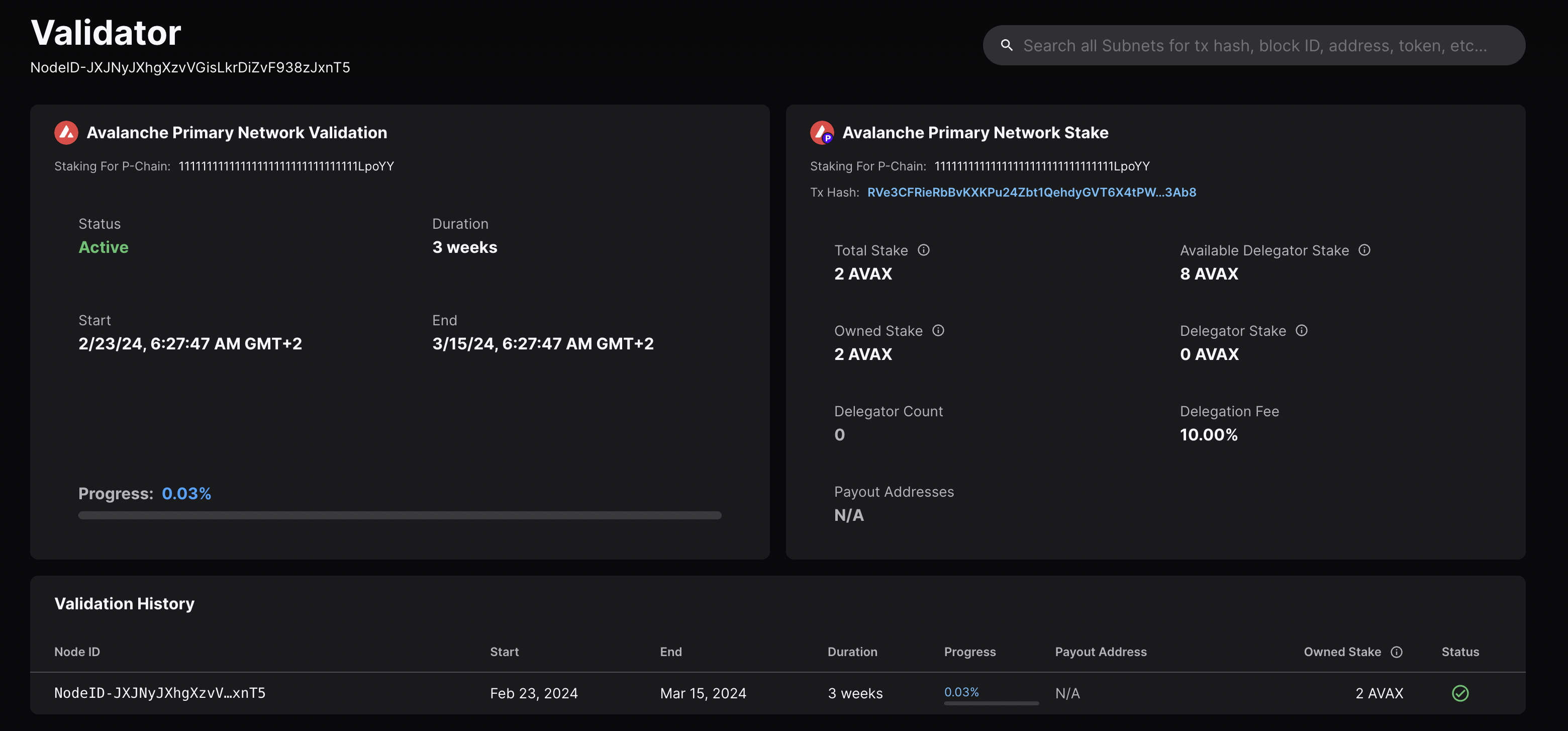

* You can run a Fuji validator node by staking only **1 Fuji AVAX**.

# Avalanche Mainnet

URL: /docs/quick-start/networks/mainnet

Learn about the Avalanche Mainnet.

The Avalanche Mainnet refers to the main network of the Avalanche blockchain where real transactions and smart contract executions occur. It is the final and production-ready version of the blockchain where users can interact with the network and transact with real-world assets.

A *network of networks*, Avalanche Mainnet includes the [Primary Network](/docs/quick-start/primary-network) formed by the X, P, and C-Chain, as well as all in-production [Avalanche L1s](/docs/quick-start/avalanche-l1s).

These Avalanche L1s are independent blockchain sub-networks that can be tailored to specific application use cases, use their own consensus mechanisms, define their own token economics, and be run by different [virtual machines](/docs/quick-start/virtual-machines).

## Add Avalanche C-Chain Mainnet to Wallet

* **Network Name**: Avalanche Mainnet C-Chain

* **RPC URL**: [https://api.avax.network/ext/bc/C/rpc](https://api.avax.network/ext/bc/C/rpc)

* **WebSocket URL**: wss\://api.avax.network/ext/bc/C/ws

* **ChainID**: `43114`

* **Symbol**: `AVAX`

* **Explorer**: [https://subnets.avax.network/c-chain](https://subnets.avax.network/c-chain)

Head over to the explorer linked above and select "Add Avalanche C-Chain to Wallet" under "Chain Info" to add the network automatically.
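To confirm which network an RPC URL actually serves, you can send an `eth_chainId` request to it. A sketch of the JSON-RPC body and of interpreting the reply; the HTTP call itself is left out, and the function and constant names are hypothetical.

```typescript
// JSON-RPC 2.0 body for `eth_chainId`; POST it to the RPC URL above
// with the header Content-Type: application/json
const chainIdRequest = JSON.stringify({ jsonrpc: "2.0", id: 1, method: "eth_chainId", params: [] })

const KNOWN_CHAINS: Record<number, string> = {
  43114: "Avalanche Mainnet C-Chain",
  43113: "Avalanche Fuji C-Chain",
}

// Maps a response such as {"jsonrpc":"2.0","id":1,"result":"0xa86a"} to a network name
function networkFromResponse(responseBody: string): string {
  const parsed = JSON.parse(responseBody) as { result?: string; error?: { message: string } }
  if (parsed.error) throw new Error(`RPC error: ${parsed.error.message}`)
  if (typeof parsed.result !== "string") throw new Error("response has no result")
  const id = parseInt(parsed.result, 16) // "0xa86a" -> 43114
  return KNOWN_CHAINS[id] ?? `unknown chain ${id}`
}
```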

# C-Chain Configurations

URL: /docs/nodes/chain-configs/c-chain

This page describes the configuration options available for the C-Chain.

To specify a config for the C-Chain, place a JSON config file at `{chain-config-dir}/C/config.json`. This file does not exist by default.

For example, if `chain-config-dir` has the default value `$HOME/.avalanchego/configs/chains`, then `config.json` should be placed at `$HOME/.avalanchego/configs/chains/C/config.json`.

The C-Chain config is printed out in the log when a node starts. Default values for each config flag are specified below.

Default values are overridden only if specified in the given config file. It is recommended to provide only values that differ from the defaults, as that makes the config more resilient to future default changes. Otherwise, if defaults change, your node will keep the old values, which might adversely affect its operation.
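The recommendation above can be sketched as a small helper that computes the config path and writes out only the entries that differ from the defaults. The helper names are hypothetical, the defaults passed in are the ones documented on this page, and writing the file is left to your runtime's file API.

```typescript
type ConfigValue = string | number | boolean | string[]

// Location of the C-Chain config inside the chain config directory
function cChainConfigPath(chainConfigDir: string): string {
  return `${chainConfigDir.replace(/\/+$/, "")}/C/config.json`
}

// Keeps only entries that differ from the defaults, so future default
// changes still take effect for everything you did not override
function overridesOnly(
  desired: Record<string, ConfigValue>,
  defaults: Record<string, ConfigValue>,
): string {
  const out: Record<string, ConfigValue> = {}
  for (const [key, value] of Object.entries(desired)) {
    if (JSON.stringify(value) !== JSON.stringify(defaults[key])) out[key] = value
  }
  return JSON.stringify(out, null, 2)
}

// Only `log-level` differs from its default ("info"), so only it is written
const configJson = overridesOnly(
  { "log-level": "debug", "pruning-enabled": true },
  { "log-level": "info", "pruning-enabled": true },
)
```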

## Gas Configuration

### `gas-target`

*Integer*

The target gas per second that this node will attempt to use when creating blocks. If not specified, the node defaults to the parent block's target gas per second.

## State Sync

### `state-sync-enabled`

*Boolean*

Set to `true` to start the chain with state sync enabled. The node will download chain state from peers up to a recent block near the tip, then proceed with normal bootstrapping.

Defaults to performing state sync when starting a new node from scratch. However, if running with an existing database, it defaults to `false` and does not perform state sync on subsequent runs.

Please note that if you need historical data, state sync isn't the right option. However, it is sufficient if you are just running a validator.

### `state-sync-skip-resume`

*Boolean*

If set to `true`, the chain will not resume a previously started state sync operation that did not complete. Normally, the chain should be able to resume state syncing without any issue. Defaults to `false`.

### `state-sync-min-blocks`

*Integer*

Minimum number of blocks the chain should be ahead of the local node to prefer state syncing over bootstrapping. If the node's database is already close to the chain's tip, bootstrapping is more efficient. Defaults to `300000`.

### `state-sync-ids`

*String*

Comma-separated list of node IDs (prefixed with `NodeID-`) to fetch state sync data from. An example setting of this field would be `--state-sync-ids="NodeID-7Xhw2mDxuDS44j42TCB6U5579esbSt3Lg,NodeID-MFrZFVCXPv5iCn6M9K6XduxGTYp891xXZ"`. If not specified (or empty), peers are selected at random. Defaults to the empty string (`""`).
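When generating `config.json` programmatically, the node IDs can be joined into the comma-separated string this field takes. A hypothetical helper, using the node IDs from the example above, that also rejects entries missing the `NodeID-` prefix:

```typescript
const NODE_ID_PREFIX = "NodeID-"

// Joins node IDs into the comma-separated form used by `state-sync-ids`
function stateSyncIds(nodeIds: string[]): string {
  for (const id of nodeIds) {
    if (!id.startsWith(NODE_ID_PREFIX) || id.length === NODE_ID_PREFIX.length) {
      throw new Error(`invalid node ID: ${id}`)
    }
  }
  return nodeIds.join(",")
}

const stateSyncConfig = {
  "state-sync-enabled": true,
  "state-sync-ids": stateSyncIds([
    "NodeID-7Xhw2mDxuDS44j42TCB6U5579esbSt3Lg",
    "NodeID-MFrZFVCXPv5iCn6M9K6XduxGTYp891xXZ",
  ]),
}
```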

### `state-sync-server-trie-cache`

*Integer*

Size of trie cache used for providing state sync data to peers in MBs. Should be a multiple of `64`. Defaults to `64`.

### `state-sync-commit-interval`

*Integer*

Specifies the commit interval at which to persist EVM and atomic tries during state sync. Defaults to `16384`.

### `state-sync-request-size`

*Integer*

The number of key/values to ask peers for per state sync request. Defaults to `1024`.

## Continuous Profiling

### `continuous-profiler-dir`

*String*

Enables the continuous profiler (captures a CPU/Memory/Lock profile at a specified interval). Defaults to `""`. If a non-empty string is provided, it enables the continuous profiler and specifies the directory to place the profiles in.

### `continuous-profiler-frequency`

*Duration*

Specifies the frequency to run the continuous profiler. Defaults to `900000000000` nanoseconds (15 minutes).
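Several *Duration* defaults on this page are written in nanoseconds. A small arithmetic sketch for reading and writing such values; it assumes whole-number nanosecond inputs below 2^53 so that plain `number` arithmetic stays exact, and the function names are hypothetical.

```typescript
const NS_PER_MS = 1e6
const NS_PER_S = 1e9
const NS_PER_MIN = 60e9

// Expresses a nanosecond duration in the largest unit that divides it exactly
function humanizeNs(ns: number): string {
  if (ns === 0) return "0"
  if (ns % NS_PER_MIN === 0) return `${ns / NS_PER_MIN} min`
  if (ns % NS_PER_S === 0) return `${ns / NS_PER_S} s`
  if (ns % NS_PER_MS === 0) return `${ns / NS_PER_MS} ms`
  return `${ns} ns`
}

// The reverse direction, for writing a value in nanoseconds
function minutesToNs(minutes: number): number {
  return minutes * NS_PER_MIN
}

const profilerDefault = humanizeNs(900000000000) // "15 min"
```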

### `continuous-profiler-max-files`

*Integer*

Specifies the maximum number of profiles to keep before removing the oldest. Defaults to `5`.

## Enabling Avalanche Specific APIs

### `admin-api-enabled`

*Boolean*

Enables the Admin API. Defaults to `false`.

### `admin-api-dir`

*String*

Specifies the directory for the Admin API to use to store CPU/Mem/Lock Profiles. Defaults to `""`.

### `warp-api-enabled`

*Boolean*

Enables the Warp API. Defaults to `false`.

## Enabling EVM APIs

### `eth-apis` (\[]string)

Use the `eth-apis` field to specify the exact set of services below to enable on your node. If this field is not set, then the default list will be: `["eth","eth-filter","net","web3","internal-eth","internal-blockchain","internal-transaction"]`.

The names used in this configuration flag have been updated in Coreth `v0.8.14`. The previous names containing `public-` and `private-` are deprecated. While the current version continues to accept deprecated values, they may not be supported in future updates and updating to the new values is recommended.

The mapping of deprecated values and their updated equivalent follows:

| Deprecated | Use instead |
| -------------------------------- | -------------------- |
| public-eth | eth |
| public-eth-filter | eth-filter |
| private-admin | admin |
| private-debug | debug |
| public-debug | debug |
| internal-public-eth | internal-eth |
| internal-public-blockchain | internal-blockchain |
| internal-public-transaction-pool | internal-transaction |
| internal-public-tx-pool | internal-tx-pool |
| internal-public-debug | internal-debug |
| internal-private-debug | internal-debug |
| internal-public-account | internal-account |
| internal-private-personal | internal-personal |

If you populate this field, it will override the defaults so you must include every service you wish to enable.
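An existing `eth-apis` list can be migrated to the new names mechanically. This sketch encodes the mapping table above; names not in the table are kept unchanged, and duplicates created by the merge (for example `private-debug` and `public-debug` both becoming `debug`) are dropped while preserving order. The function name is hypothetical.

```typescript
// Deprecated `eth-apis` names and their replacements, from the table above
const DEPRECATED_API_NAMES: Record<string, string> = {
  "public-eth": "eth",
  "public-eth-filter": "eth-filter",
  "private-admin": "admin",
  "private-debug": "debug",
  "public-debug": "debug",
  "internal-public-eth": "internal-eth",
  "internal-public-blockchain": "internal-blockchain",
  "internal-public-transaction-pool": "internal-transaction",
  "internal-public-tx-pool": "internal-tx-pool",
  "internal-public-debug": "internal-debug",
  "internal-private-debug": "internal-debug",
  "internal-public-account": "internal-account",
  "internal-private-personal": "internal-personal",
}

// Rewrites an `eth-apis` list to current names, dropping duplicates but keeping order
function migrateEthApis(apis: string[]): string[] {
  const seen = new Set<string>()
  const result: string[] = []
  for (const name of apis) {
    const current = DEPRECATED_API_NAMES[name] ?? name
    if (!seen.has(current)) {
      seen.add(current)
      result.push(current)
    }
  }
  return result
}

const migrated = migrateEthApis(["public-eth", "private-debug", "public-debug", "net"])
// ["eth", "debug", "net"]
```

Since a populated list replaces the defaults, the migrated list still has to name every service you want enabled.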

### `eth`

The API name `public-eth` is deprecated as of v1.7.15, and the APIs previously under this name have been migrated to `eth`.

Adds the following RPC calls to the `eth_*` namespace. Defaults to `true`.

* `eth_coinbase`

* `eth_etherbase`

### `eth-filter`

The API name `public-eth-filter` is deprecated as of v1.7.15, and the APIs previously under this name have been migrated to `eth-filter`.

Enables the public filter API for the `eth_*` namespace. Defaults to `true`.

Adds the following RPC calls (see [here](https://eth.wiki/json-rpc/API) for complete documentation):

* `eth_newPendingTransactionFilter`

* `eth_newPendingTransactions`

* `eth_newAcceptedTransactions`

* `eth_newBlockFilter`

* `eth_newHeads`

* `eth_logs`

* `eth_newFilter`

* `eth_getLogs`

* `eth_uninstallFilter`

* `eth_getFilterLogs`

* `eth_getFilterChanges`

### `admin`

The API name `private-admin` is deprecated as of v1.7.15, and the APIs previously under this name have been migrated to `admin`.

Adds the following RPC calls to the `admin_*` namespace. Defaults to `false`.

* `admin_importChain`

* `admin_exportChain`

### `debug`

The API names `private-debug` and `public-debug` are deprecated as of v1.7.15, and the APIs previously under these names have been migrated to `debug`.

Adds the following RPC calls to the `debug_*` namespace. Defaults to `false`.

* `debug_dumpBlock`

* `debug_accountRange`

* `debug_preimage`

* `debug_getBadBlocks`

* `debug_storageRangeAt`

* `debug_getModifiedAccountsByNumber`

* `debug_getModifiedAccountsByHash`

* `debug_getAccessibleState`

### `net`

Adds the following RPC calls to the `net_*` namespace. Defaults to `true`.

* `net_listening`

* `net_peerCount`

* `net_version`

Note: Coreth is a virtual machine and does not have direct access to the networking layer, so `net_listening` always returns true and `net_peerCount` always returns 0. For accurate metrics on the network layer, users should use the AvalancheGo APIs.

### `debug-tracer`

Adds the following RPC calls to the `debug_*` namespace. Defaults to `false`.

* `debug_traceChain`

* `debug_traceBlockByNumber`

* `debug_traceBlockByHash`

* `debug_traceBlock`

* `debug_traceBadBlock`

* `debug_intermediateRoots`

* `debug_traceTransaction`

* `debug_traceCall`

### `web3`

Adds the following RPC calls to the `web3_*` namespace. Defaults to `true`.

* `web3_clientVersion`

* `web3_sha3`

### `internal-eth`

The API name `internal-public-eth` is deprecated as of v1.7.15, and the APIs previously under this name have been migrated to `internal-eth`.

Adds the following RPC calls to the `eth_*` namespace. Defaults to `true`.

* `eth_gasPrice`

* `eth_baseFee`

* `eth_maxPriorityFeePerGas`

* `eth_feeHistory`

### `internal-blockchain`

The API name `internal-public-blockchain` is deprecated as of v1.7.15, and the APIs previously under this name have been migrated to `internal-blockchain`.

Adds the following RPC calls to the `eth_*` namespace. Defaults to `true`.

* `eth_chainId`

* `eth_blockNumber`

* `eth_getBalance`

* `eth_getProof`

* `eth_getHeaderByNumber`

* `eth_getHeaderByHash`

* `eth_getBlockByNumber`

* `eth_getBlockByHash`

* `eth_getUncleBlockByNumberAndIndex`

* `eth_getUncleBlockByBlockHashAndIndex`

* `eth_getUncleCountByBlockNumber`

* `eth_getUncleCountByBlockHash`

* `eth_getCode`

* `eth_getStorageAt`

* `eth_call`

* `eth_estimateGas`

* `eth_createAccessList`

### `internal-transaction`

The API name `internal-public-transaction-pool` is deprecated as of v1.7.15, and the APIs previously under this name have been migrated to `internal-transaction`.

Adds the following RPC calls to the `eth_*` namespace. Defaults to `true`.

* `eth_getBlockTransactionCountByNumber`

* `eth_getBlockTransactionCountByHash`

* `eth_getTransactionByBlockNumberAndIndex`

* `eth_getTransactionByBlockHashAndIndex`

* `eth_getRawTransactionByBlockNumberAndIndex`

* `eth_getRawTransactionByBlockHashAndIndex`

* `eth_getTransactionCount`

* `eth_getTransactionByHash`

* `eth_getRawTransactionByHash`

* `eth_getTransactionReceipt`

* `eth_sendTransaction`

* `eth_fillTransaction`

* `eth_sendRawTransaction`

* `eth_sign`

* `eth_signTransaction`

* `eth_pendingTransactions`

* `eth_resend`

### `internal-tx-pool`

The API name `internal-public-tx-pool` is deprecated as of v1.7.15, and the APIs previously under this name have been migrated to `internal-tx-pool`.

Adds the following RPC calls to the `txpool_*` namespace. Defaults to `false`.

* `txpool_content`

* `txpool_contentFrom`

* `txpool_status`

* `txpool_inspect`

### `internal-debug`

The API names `internal-private-debug` and `internal-public-debug` are deprecated as of v1.7.15, and the APIs previously under these names have been migrated to `internal-debug`.

Adds the following RPC calls to the `debug_*` namespace. Defaults to `false`.

* `debug_getHeaderRlp`

* `debug_getBlockRlp`

* `debug_printBlock`

* `debug_chaindbProperty`

* `debug_chaindbCompact`

### `debug-handler`

Adds the following RPC calls to the `debug_*` namespace. Defaults to `false`.

* `debug_verbosity`

* `debug_vmodule`

* `debug_backtraceAt`

* `debug_memStats`

* `debug_gcStats`

* `debug_blockProfile`

* `debug_setBlockProfileRate`

* `debug_writeBlockProfile`

* `debug_mutexProfile`

* `debug_setMutexProfileFraction`

* `debug_writeMutexProfile`

* `debug_writeMemProfile`

* `debug_stacks`

* `debug_freeOSMemory`

* `debug_setGCPercent`

### `internal-account`

The API name `internal-public-account` is deprecated as of v1.7.15, and the APIs previously under this name have been migrated to `internal-account`.

Adds the following RPC calls to the `eth_*` namespace. Defaults to `true`.

* `eth_accounts`

### `internal-personal`

The API name `internal-private-personal` is deprecated as of v1.7.15, and the APIs previously under this name have been migrated to `internal-personal`.

Adds the following RPC calls to the `personal_*` namespace. Defaults to `false`.

* `personal_listAccounts`

* `personal_listWallets`

* `personal_openWallet`

* `personal_deriveAccount`

* `personal_newAccount`

* `personal_importRawKey`

* `personal_unlockAccount`

* `personal_lockAccount`

* `personal_sendTransaction`

* `personal_signTransaction`

* `personal_sign`

* `personal_ecRecover`

* `personal_signAndSendTransaction`

* `personal_initializeWallet`

* `personal_unpair`

## API Configuration

### `rpc-gas-cap`

*Integer*

The maximum gas to be consumed by an RPC Call (used in `eth_estimateGas` and `eth_call`). Defaults to `50,000,000`.

### `rpc-tx-fee-cap`

*Integer*

Global transaction fee (price \* `gaslimit`) cap (measured in AVAX) for send-transaction variants. Defaults to `100`.
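As a rough illustration of the arithmetic, assuming the cap compares gas price × gas limit (in wei) against `rpc-tx-fee-cap` converted from AVAX to wei (1 AVAX = 10^18 wei on the C-Chain). The function name is hypothetical and only whole-AVAX caps are handled.

```typescript
// C-Chain AVAX has 18 decimals: 1 AVAX = 10^18 wei
const WEI_PER_AVAX = BigInt("1000000000000000000")
const GWEI = BigInt(1000000000)

// Whether price * gas limit exceeds a fee cap given in whole AVAX
function exceedsTxFeeCap(gasPriceWei: bigint, gasLimit: bigint, capAvax: bigint): boolean {
  return gasPriceWei * gasLimit > capAvax * WEI_PER_AVAX
}

// 25 gwei * 21,000 gas = 0.000525 AVAX, far below the default cap of 100 AVAX
const simpleTransferOverCap = exceedsTxFeeCap(BigInt(25) * GWEI, BigInt(21000), BigInt(100)) // false
```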

### `api-max-duration`

*Duration*

Maximum API call duration. If API calls exceed this duration, they will time out. Defaults to `0` (no maximum).

### `api-max-blocks-per-request`

*Integer*

Maximum number of blocks to serve per `getLogs` request. Defaults to `0` (no maximum).

### `ws-cpu-refill-rate`

*Duration*

The refill rate specifies the maximum amount of CPU time to allot a single connection per second. Defaults to no maximum (`0`).

### `ws-cpu-max-stored`

*Duration*

Specifies the maximum amount of CPU time that can be stored for a single WS connection. Defaults to no maximum (`0`).

### `allow-unfinalized-queries`

*Boolean*

Allows queries for unfinalized (not yet accepted) blocks/transactions. Defaults to `false`.

### `accepted-cache-size`

*Integer*

Specifies the depth to keep accepted headers and accepted logs in the cache. This is particularly useful to improve the performance of `eth_getLogs` for recent logs. Defaults to `32`.

### `http-body-limit`

*Integer*

Maximum size in bytes for HTTP request bodies. Defaults to `0` (no limit).

## Transaction Pool

### `local-txs-enabled`

*Boolean*

Enables local transaction handling (prioritizes transactions submitted through this node). Defaults to `false`.

### `allow-unprotected-txs`

*Boolean*

If `true`, the APIs will allow transactions that are not replay protected (EIP-155) to be issued through this node. Defaults to `false`.

### `allow-unprotected-tx-hashes`

*\[]TxHash*

Specifies an array of transaction hashes that should be allowed to bypass replay protection. This flag is intended for node operators that want to explicitly allow specific transactions to be issued through their API. Defaults to an empty list.

### `price-options-slow-fee-percentage`

*Integer*

Percentage to apply for slow fee estimation. Defaults to `95`.

### `price-options-fast-fee-percentage`

*Integer*

Percentage to apply for fast fee estimation. Defaults to `105`.

### `price-options-max-tip`

*Integer*

Maximum tip in wei for fee estimation. Defaults to `20000000000` (20 Gwei).

### `push-gossip-percent-stake`

*Float*

Percentage of the total stake to send transactions received over the RPC. Defaults to 0.9.

### `push-gossip-num-validators`

*Integer*

Number of validators to initially send transactions received over the RPC. Defaults to 100.

### `push-gossip-num-peers`

*Integer*

Number of peers to initially send transactions received over the RPC. Defaults to 0.

### `push-regossip-num-validators`

*Integer*

Number of validators to periodically send transactions received over the RPC. Defaults to 10.

### `push-regossip-num-peers`

*Integer*

Number of peers to periodically send transactions received over the RPC. Defaults to 0.

### `push-gossip-frequency`

*Duration*

Frequency to send transactions received over the RPC to peers. Defaults to `100000000` nanoseconds (100 milliseconds).

### `pull-gossip-frequency`

*Duration*

Frequency to request transactions from peers. Defaults to `1000000000` nanoseconds (1 second).

### `regossip-frequency`

*Duration*

Amount of time that should elapse before we attempt to re-gossip a transaction that was already gossiped once. Defaults to `30000000000` nanoseconds (30 seconds).

### `tx-pool-price-limit`

*Integer*

Minimum gas price to enforce for acceptance into the pool. Defaults to 1 wei.

### `tx-pool-price-bump`

*Integer*

Minimum price bump percentage to replace an already existing transaction (nonce). Defaults to 10%.
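A sketch of what the bump means for a replacement transaction, assuming the go-ethereum rule that the new price must be at least `old * (100 + bump) / 100` with integer division. This mirrors the upstream transaction pool and is an assumption here; for dynamic-fee transactions both the fee cap and the tip are compared in a similar way.

```typescript
// Minimum gas price for a replacement (same sender and nonce), assuming
// new >= old * (100 + bump) / 100 with integer division
function minReplacementPrice(oldPriceWei: bigint, bumpPercent: bigint = BigInt(10)): bigint {
  const hundred = BigInt(100)
  return (oldPriceWei * (hundred + bumpPercent)) / hundred
}

const GWEI = BigInt(1000000000)
// With the default 10% bump, a 25 gwei transaction needs at least 27.5 gwei
const neededPrice = minReplacementPrice(BigInt(25) * GWEI)
```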

### `tx-pool-account-slots`

*Integer*

Number of executable transaction slots guaranteed per account. Defaults to 16.

### `tx-pool-global-slots`

*Integer*

Maximum number of executable transaction slots for all accounts. Defaults to 5120.

### `tx-pool-account-queue`

*Integer*

Maximum number of non-executable transaction slots permitted per account. Defaults to 64.

### `tx-pool-global-queue`

*Integer*

Maximum number of non-executable transaction slots for all accounts. Defaults to 1024.

### `tx-pool-lifetime`

*Duration*

Maximum duration a non-executable transaction will be allowed in the pool. Defaults to `600000000000` nanoseconds (10 minutes).

## Metrics

### `metrics-expensive-enabled`

*Boolean*

Enables expensive metrics. Defaults to `true`.

## Snapshots

### `snapshot-wait`

*Boolean*

If `true`, waits for snapshot generation to complete before starting. Defaults to `false`.

### `snapshot-verification-enabled`

*Boolean*

If `true`, verifies the complete snapshot after it has been generated. Defaults to `false`.

## Logging

### `log-level`

*String*

Defines the log level for the chain. Must be one of `"trace"`, `"debug"`, `"info"`, `"warn"`, `"error"`, `"crit"`. Defaults to `"info"`.

### `log-json-format`

*Boolean*

If `true`, changes logs to JSON format. Defaults to `false`.

## Keystore Settings

### `keystore-directory`

*String*

The directory that contains private keys. Can be given as a relative path. If empty, uses a temporary directory at `coreth-keystore`. Defaults to the empty string (`""`).

### `keystore-external-signer`

*String*

Specifies an external URI for a clef-type signer. Defaults to the empty string (`""`, not enabled).

### `keystore-insecure-unlock-allowed`

*Boolean*

If `true`, allows users to unlock accounts in an unsafe HTTP environment. Defaults to `false`.

## Database

### `trie-clean-cache`

*Integer*

Size of cache used for clean trie nodes (in MBs). Should be a multiple of `64`. Defaults to `512`.

### `trie-dirty-cache`

*Integer*

Size of cache used for dirty trie nodes (in MBs). When the dirty nodes exceed this limit, they are written to disk. Defaults to `512`.

### `trie-dirty-commit-target`

*Integer*

Memory limit to target in the dirty cache before performing a commit (in MBs). Defaults to `20`.

### `trie-prefetcher-parallelism`

*Integer*

Maximum number of concurrent disk reads the trie prefetcher should perform at once. Defaults to `16`.

### `snapshot-cache`

*Integer*

Size of the snapshot disk layer clean cache (in MBs). Should be a multiple of `64`. Defaults to `256`.
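Three size options on this page (`trie-clean-cache`, `snapshot-cache` and `state-sync-server-trie-cache`) are documented as "should be a multiple of 64". A hypothetical pre-start check that flags values breaking that guidance; it only warns and does not assert how the node itself reacts.

```typescript
// Size options documented as "should be a multiple of 64" (values in MB)
const MULTIPLE_OF_64_KEYS = ["trie-clean-cache", "snapshot-cache", "state-sync-server-trie-cache"]

// Returns one warning per listed option whose value is not an integer multiple of 64
function cacheSizeWarnings(config: Record<string, unknown>): string[] {
  const warnings: string[] = []
  for (const key of MULTIPLE_OF_64_KEYS) {
    const value = config[key]
    if (value === undefined) continue // not set, so the default applies
    if (typeof value !== "number" || !Number.isInteger(value) || value % 64 !== 0) {
      warnings.push(`${key} should be a multiple of 64 MB, got ${String(value)}`)
    }
  }
  return warnings
}

const sizeWarnings = cacheSizeWarnings({ "trie-clean-cache": 512, "snapshot-cache": 100 })
```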

### `acceptor-queue-limit`

*Integer*

Specifies the maximum number of blocks to queue during block acceptance before blocking on Accept. Defaults to `64`.

### `commit-interval`

*Integer*

Specifies the commit interval at which to persist the merkle trie to disk. Defaults to `4096`.

### `pruning-enabled`

*Boolean*

If `true`, database pruning of obsolete historical data will be enabled. This reduces the amount of data written to disk, but does not delete any state previously written to disk. This flag should be set to `false` for nodes that need access to all data at historical roots. Pruning will be done only for new data. Defaults to `false` in v1.4.9, and `true` in subsequent versions.

If a node is ever run with `pruning-enabled` as `false` (archival mode), setting `pruning-enabled` to `true` will result in a warning and the node will shut down. This is to protect against unintentional misconfigurations of an archival node.

To override this and switch to pruning mode, in addition to `pruning-enabled: true`, `allow-missing-tries` should be set to `true` as well.
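The rule above can be expressed as a small startup check. This is a hypothetical model of the documented behavior, not code from the node: whether the node "was ever archival" is an input you supply, and `pruning-enabled` falls back to its current default of `true`.

```typescript
// Subset of the C-Chain config relevant to switching pruning modes
interface PruningConfig {
  "pruning-enabled"?: boolean
  "allow-missing-tries"?: boolean
}

// A node that has ever run as archival (pruning disabled) shuts down when
// started in pruning mode, unless `allow-missing-tries` is also true
function pruningStartupOutcome(wasEverArchival: boolean, config: PruningConfig): "starts" | "shuts down" {
  // `pruning-enabled` defaults to true in versions after v1.4.9
  const pruning = config["pruning-enabled"] ?? true
  if (wasEverArchival && pruning && config["allow-missing-tries"] !== true) {
    return "shuts down"
  }
  return "starts"
}
```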

### `populate-missing-tries`

*uint64*

If non-nil, sets the starting point for repopulating missing tries to re-generate archival merkle forest.

To restore an archival merkle forest that has been corrupted (missing trie nodes for a section of the blockchain), set this to the last block on disk at which the full trie was still available. On startup, the node re-processes blocks from that height onward and re-generates the archival merkle forest. This flag should be used once to re-generate the archival merkle forest and should be removed from the config after completion. It delays node startup while old blocks are re-processed.

### `populate-missing-tries-parallelism`

*Integer*

Number of concurrent readers to use when re-populating missing tries on startup. Defaults to 1024.

### `allow-missing-tries`

*Boolean*

If `true`, allows a node that was once configured as archival to switch to pruning mode. Defaults to `false`.

### `preimages-enabled`

*Boolean*

If `true`, enables preimages. Defaults to `false`.

### `prune-warp-db-enabled`

*Boolean*

If `true`, clears the warp database on startup. Defaults to `false`.

### `offline-pruning-enabled`

*Boolean*

If `true`, offline pruning will run on startup and block until it completes (approximately one hour on Mainnet). This will reduce the size of the database by deleting old trie nodes. **While performing offline pruning, your node will not be able to process blocks and will be considered offline.** While ongoing, the pruning process consumes a small amount of additional disk space (for deletion markers and the bloom filter). For more information, see [here](https://build.avax.network/docs/nodes/maintain/reduce-disk-usage#disk-space-considerations).

Since offline pruning deletes old state data, this should not be run on nodes that need to support archival API requests.

This is meant to be run manually, so after running with this flag once, it must be toggled back to false before running the node again. Therefore, you should run with this flag set to true and then set it to false on the subsequent run.

### `offline-pruning-bloom-filter-size`

*Integer*

This flag sets the size of the bloom filter to use in offline pruning (denominated in MB and defaulting to 512 MB). The bloom filter is kept in memory for efficient checks during pruning and is also written to disk to allow pruning to resume without re-generating the bloom filter.

The active state is added to the bloom filter before the database is iterated to find trie nodes that can be safely deleted; any trie node that is not in the bloom filter is considered safe to delete. The size of the bloom filter affects its false positive rate, which in turn affects the results of offline pruning: each false positive causes an unused trie node to be kept, so a higher rate leaves more stale data on disk. This is an advanced parameter that has been tuned to 512 MB and should not be changed without careful consideration.

### `offline-pruning-data-directory`

*String*

This flag must be set when offline pruning is enabled and sets the directory that offline pruning will use to write its bloom filter to disk. This directory should not be changed between runs until offline pruning has completed.
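Offline pruning and `populate-missing-tries` are both meant to be active for a single startup only. As a sketch of that two-step workflow, the TypeScript below produces the config for the pruning run and the config for the runs after it. The `ChainConfig` type, the helper names, and the data directory path are hypothetical; only the option keys come from this page, and writing the result to the chain's `config.json` is left out.

```ts
// Hypothetical subset of chain config options; other keys pass through untouched.
type ChainConfig = {
  "offline-pruning-enabled"?: boolean;
  "offline-pruning-data-directory"?: string;
  "populate-missing-tries"?: number;
  [key: string]: unknown;
};

// Config for the single run that performs offline pruning.
function offlinePruningRun(base: ChainConfig, dataDirectory: string): ChainConfig {
  if (dataDirectory.trim() === "") {
    throw new Error("offline-pruning-data-directory must be set when offline pruning is enabled");
  }
  return {
    ...base,
    "offline-pruning-enabled": true,
    // Keep this path unchanged until pruning has completed.
    "offline-pruning-data-directory": dataDirectory,
  };
}

// Config for every later start: one-shot flags are switched off or removed.
function afterOneShotRun(previous: ChainConfig): ChainConfig {
  const next: ChainConfig = { ...previous, "offline-pruning-enabled": false };
  delete next["populate-missing-tries"];
  return next;
}

const base: ChainConfig = { "pruning-enabled": true };
const pruningRun = offlinePruningRun(base, "/var/lib/avalanchego/offline-pruning");
const normalRun = afterOneShotRun(pruningRun);
console.log(JSON.stringify(pruningRun, null, 2));
console.log(JSON.stringify(normalRun, null, 2));
```

Setting `offline-pruning-enabled` explicitly to `false` (rather than deleting it) keeps the intent visible in the file, while `populate-missing-tries` is removed as this page recommends.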

### `transaction-history`

*Integer*

Number of recent blocks for which to maintain transaction lookup indices in the database. If set to 0, transaction lookup indices will be maintained for all blocks. Defaults to `0`.
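To make the retention window concrete, here is a small sketch of which block heights would still have a transaction lookup index. It assumes that "recent blocks" means the `transaction-history` blocks ending at the current head; the function name is hypothetical, and the node's exact boundary handling may differ.

```ts
// Hypothetical helper: does a block at `height` still have a transaction
// lookup index, given the `transaction-history` setting?
function hasTxLookupIndex(height: number, head: number, transactionHistory: number): boolean {
  if (height > head) return false; // block not accepted yet
  if (transactionHistory === 0) return true; // 0 keeps indices for all blocks
  // Assumed window: the `transactionHistory` most recent blocks, ending at head.
  return head - height < transactionHistory;
}

console.log(hasTxLookupIndex(91, 100, 10)); // true
console.log(hasTxLookupIndex(90, 100, 10)); // false
```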

### `state-history`

*Integer*

The maximum number of blocks from head whose state histories are reserved for pruning blockchains. Defaults to `32`.

### `historical-proof-query-window`

*Integer*

Applies only when running in archive mode: the number of blocks before the last accepted block for which state proof queries are accepted. Defaults to `43200`.

### `skip-tx-indexing`

*Boolean*

If set to `true`, the node will not index transactions. The transaction lookup limit (`transaction-history`, formerly `tx-lookup-limit`) can still be used to control the deletion of old transaction indices. Defaults to `false`.

### `inspect-database`

*Boolean*

If set to `true`, inspects the database on startup. Defaults to `false`.

## VM Networking

### `max-outbound-active-requests`

*Integer*

Specifies the maximum number of outbound VM2VM requests in flight at once. Defaults to `16`.

## Warp Configuration

### `warp-off-chain-messages`

*Array of Hex Strings*

Encoded off-chain messages (unrelated to any on-chain event, such as a block or an AddressedCall) that the node should be willing to sign. Only AddressedCall payloads are supported. Defaults to an empty array.

## Miscellaneous

### `skip-upgrade-check`

*Boolean*

If set to `true`, the chain will skip verifying that all expected network upgrades have taken place before the last accepted block on startup. This allows node operators to recover if their node has accepted blocks after a network upgrade with a version of the code prior to the upgrade. Defaults to `false`.

# Chain Specific Configs

URL: /docs/nodes/chain-configs

Some chains allow the node operator to provide a custom configuration. AvalancheGo can read chain configurations from files and pass them to the corresponding chains on initialization.

AvalancheGo looks for these files in the directory specified by the `--chain-config-dir` flag, as documented [here](/docs/nodes/configure/configs-flags#--chain-config-dir-string). If omitted, it defaults to `$HOME/.avalanchego/configs/chains`. This directory can have sub-directories whose names are chain IDs or chain aliases. Each sub-directory contains the configuration for the chain specified in the directory name. Each sub-directory should contain a file named `config`, whose contents are passed in when the corresponding chain is initialized (see below for the file extension). For example, the config for the C-Chain should be at `{chain-config-dir}/C/config.json`.

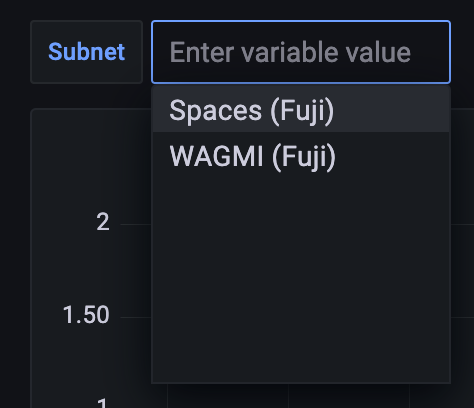

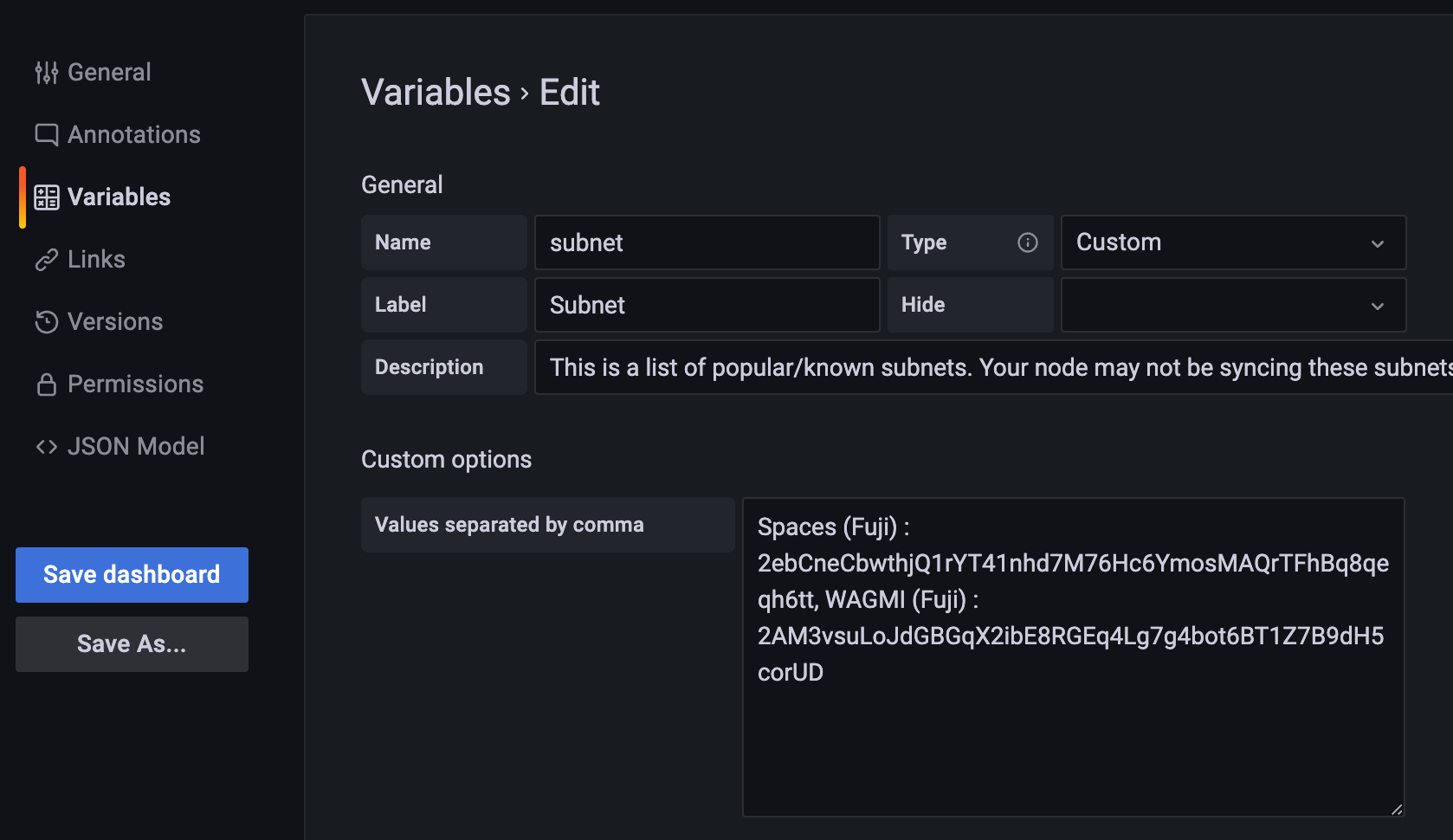

This also applies to Avalanche L1s. For example, if an Avalanche L1's chain ID is `2ebCneCbwthjQ1rYT41nhd7M76Hc6YmosMAQrTFhBq8qeqh6tt`, the config for this chain should be at `{chain-config-dir}/2ebCneCbwthjQ1rYT41nhd7M76Hc6YmosMAQrTFhBq8qeqh6tt/config.json`.


By default, none of these directories and/or files exist. You would need to create them manually if needed.

The filename extension that these files should have, and the contents of these files, is VM-dependent. For example, some chains may expect `config.txt` while others expect `config.json`. If multiple files are provided with the same name but different extensions (for example `config.json` and `config.txt`) in the same sub-directory, AvalancheGo will exit with an error.

For a given chain, AvalancheGo follows the sequence below to look for its config file, where all folder and file names are case-sensitive:

1. First, it looks for a config sub-directory whose name is the chain ID.

2. If it isn't found, it looks for a config sub-directory whose name is the chain's primary alias.

3. If that isn't found either, it looks for a config sub-directory whose name is another alias for the chain.
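The lookup order above, together with the rule about duplicate extensions, can be modelled as a pure function. This is a simplified sketch rather than AvalancheGo's code: `entries` is a hypothetical stand-in for a listing of the chain config directory, and `myChainID` in the usage is a placeholder.

```ts
// Simplified model of the lookup order; not AvalancheGo's implementation.
// `entries` lists the chain config directory as "sub-directory/file" strings.
function findChainConfig(
  entries: string[],
  chainID: string,
  primaryAlias: string,
  otherAliases: string[],
): string | undefined {
  const hasDir = (name: string) => entries.some((e) => e.split("/")[0] === name);
  // 1. chain ID, 2. primary alias, 3. any other alias; names are case-sensitive.
  const dir = [chainID, primaryAlias].concat(otherAliases).filter(hasDir)[0];
  if (dir === undefined) return undefined; // no custom config: VM defaults apply

  // The file is named "config" with a VM-dependent extension (or none).
  const configs = entries.filter((e) => {
    const parts = e.split("/");
    return parts.length === 2 && parts[0] === dir && /^config(\.[^.]+)?$/.test(parts[1] ?? "");
  });
  if (configs.length > 1) {
    // e.g. config.json next to config.txt: AvalancheGo exits with an error.
    throw new Error(`multiple config files in ${dir}: ${configs.join(", ")}`);
  }
  return configs.length === 1 ? configs[0] : undefined;
}

console.log(findChainConfig(["myChainID/config.json", "C/config.json"], "myChainID", "C", []));
```

The chain ID directory wins over the alias here, so the call logs `myChainID/config.json`.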

Alternatively, for some setups it might be more convenient to provide config entirely via the command line. For that, you can use AvalancheGo `--chain-config-content` flag, as documented [here](/docs/nodes/configure/configs-flags#--chain-config-content-string).

It is not required to provide these custom configurations. If they are not provided, a VM-specific default config is used, and its values are printed when the node starts.

## Avalanche L1 Chain Configs

As mentioned above, if an Avalanche L1's chain ID is `2ebCneCbwthjQ1rYT41nhd7M76Hc6YmosMAQrTFhBq8qeqh6tt`, the config for this chain should be at `{chain-config-dir}/2ebCneCbwthjQ1rYT41nhd7M76Hc6YmosMAQrTFhBq8qeqh6tt/config.json`.

## FAQs

* `getBlockNumber` returns only finalized blocks. To allow queries for unfinalized (not yet accepted) blocks and transactions, set `allow-unfinalized-queries` to `true` (it defaults to `false`).

* Disabling pruning (`pruning-enabled: false`) on a node where it was previously enabled does not affect blocks whose state was already pruned. Queries against those blocks return missing trie node errors, because the node can't look up the state of a historical block once that state has been deleted.

# P-Chain

URL: /docs/nodes/chain-configs/p-chain

This page is an overview of the configurations and flags supported by the P-Chain.

This document provides details about the configuration options available for the PlatformVM.

## Standard Configurations

In order to specify a configuration for the PlatformVM, you need to define a `Config` struct and its parameters. The default values for these parameters are:

| Option | Type | Default |
| ------------------------------------ | --------------- | ------------------ |
| `network` | `Network` | `DefaultNetwork` |
| `block-cache-size` | `int` | `64 * units.MiB` |
| `tx-cache-size` | `int` | `128 * units.MiB` |
| `transformed-subnet-tx-cache-size` | `int` | `4 * units.MiB` |
| `reward-utxos-cache-size` | `int` | `2048` |
| `chain-cache-size` | `int` | `2048` |
| `chain-db-cache-size` | `int` | `2048` |
| `block-id-cache-size` | `int` | `8192` |
| `fx-owner-cache-size` | `int` | `4 * units.MiB` |
| `subnet-to-l1-conversion-cache-size` | `int` | `4 * units.MiB` |
| `l1-weights-cache-size` | `int` | `16 * units.KiB` |
| `l1-inactive-validators-cache-size` | `int` | `256 * units.KiB` |
| `l1-subnet-id-node-id-cache-size` | `int` | `16 * units.KiB` |
| `checksums-enabled` | `bool` | `false` |
| `mempool-prune-frequency` | `time.Duration` | `30 * time.Minute` |

Default values are overridden only if explicitly specified in the config.
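The `units.KiB`/`units.MiB` defaults in the table are byte counts. The sketch below, assuming 1 KiB = 1024 bytes and 1 MiB = 1024 KiB, shows how a partial override combines with the defaults and why only the overridden keys need to be written to the P-Chain config file (by analogy with the C-Chain and X-Chain, presumably `{chain-config-dir}/P/config.json`). The object and type names are hypothetical, and only a few options are included.

```ts
// Byte-size helpers mirroring the `units.KiB` / `units.MiB` notation above.
const KiB = 1024;
const MiB = 1024 * KiB;

// A subset of the defaults from the table, expressed in bytes or entries.
const PLATFORM_DEFAULTS = {
  "block-cache-size": 64 * MiB,
  "tx-cache-size": 128 * MiB,
  "reward-utxos-cache-size": 2048,
  "l1-weights-cache-size": 16 * KiB,
  "checksums-enabled": false,
};
type PlatformOptions = typeof PLATFORM_DEFAULTS;

// Effective settings: explicitly specified keys win, everything else keeps its default.
function effectiveConfig(overrides: Partial<PlatformOptions>): PlatformOptions {
  return { ...PLATFORM_DEFAULTS, ...overrides };
}

// Only the overrides need to go into the config file.
const overrides: Partial<PlatformOptions> = {
  "block-cache-size": 128 * MiB,
  "checksums-enabled": true,
};
const fileContents = JSON.stringify(overrides, null, 2);
const effective = effectiveConfig(overrides);
console.log(fileContents);
console.log(effective["tx-cache-size"]); // 134217728 (the 128 MiB default)
```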

## Network Configuration

The Network configuration defines parameters that control the network's gossip and validator behavior.

### Parameters

| Field | Type | Default | Description |
| -------------------------------------------------- | --------------- | ------------------------- | -------------------------------------------------------------------------------------------------------------------------------- |
| `max-validator-set-staleness` | `time.Duration` | `1 minute` | Maximum age of a validator set used for peer sampling and rate limiting |
| `target-gossip-size` | `int` | `20 * units.KiB` | Target number of bytes to send when pushing transactions or responding to transaction pull requests |
| `push-gossip-percent-stake` | `float64` | `0.9` | Percentage of total stake to target in the initial gossip round. Higher stake nodes are prioritized to minimize network messages |
| `push-gossip-num-validators` | `int` | `100` | Number of validators to push transactions to in the initial gossip round |
| `push-gossip-num-peers` | `int` | `0` | Number of peers to push transactions to in the initial gossip round |
| `push-regossip-num-validators` | `int` | `10` | Number of validators for subsequent gossip rounds after the initial push |
| `push-regossip-num-peers` | `int` | `0` | Number of peers for subsequent gossip rounds after the initial push |
| `push-gossip-discarded-cache-size` | `int` | `16384` | Size of the cache storing recently dropped transaction IDs from mempool to avoid re-pushing |
| `push-gossip-max-regossip-frequency` | `time.Duration` | `30 * time.Second` | Maximum frequency limit for re-gossiping a transaction |
| `push-gossip-frequency` | `time.Duration` | `500 * time.Millisecond` | Frequency of push gossip rounds |
| `pull-gossip-poll-size` | `int` | `1` | Number of validators to sample during pull gossip rounds |
| `pull-gossip-frequency` | `time.Duration` | `1500 * time.Millisecond` | Frequency of pull gossip rounds |
| `pull-gossip-throttling-period` | `time.Duration` | `10 * time.Second` | Time window for throttling pull requests |
| `pull-gossip-throttling-limit` | `int` | `2` | Maximum number of pull queries allowed per validator within the throttling window |
| `expected-bloom-filter-elements` | `int` | `8 * 1024` | Expected number of elements when creating a new bloom filter. Larger values increase filter size |
| `expected-bloom-filter-false-positive-probability` | `float64` | `0.01` | Target probability of false positives after inserting the expected number of elements. Lower values increase filter size |
| `max-bloom-filter-false-positive-probability` | `float64` | `0.05` | Threshold for bloom filter regeneration. Filter is refreshed when false positive probability exceeds this value |
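To illustrate why `push-gossip-percent-stake` favours high-stake validators, the sketch below greedily picks validators in descending stake order until the chosen set covers the requested fraction of total stake. It is a simplified model, not AvalancheGo's sampling logic: it ignores `push-gossip-num-validators`, the peer counts, and any randomness, and the `Validator` type and NodeIDs are hypothetical.

```ts
// Hypothetical validator record: node ID plus its stake weight.
type Validator = { nodeID: string; stake: number };

// Greedy illustration: take validators from highest to lowest stake until the
// selected set holds at least `percentStake` of the total stake.
function pushTargetsByStake(validators: Validator[], percentStake: number): string[] {
  const totalStake = validators.reduce((sum, v) => sum + v.stake, 0);
  const byStakeDesc = validators.slice().sort((a, b) => b.stake - a.stake);
  const targets: string[] = [];
  let coveredStake = 0;
  for (const v of byStakeDesc) {
    if (coveredStake >= percentStake * totalStake) break;
    targets.push(v.nodeID);
    coveredStake += v.stake;
  }
  return targets;
}

const validators: Validator[] = [
  { nodeID: "NodeID-A", stake: 50 },
  { nodeID: "NodeID-D", stake: 5 },
  { nodeID: "NodeID-B", stake: 30 },
  { nodeID: "NodeID-C", stake: 15 },
];
console.log(pushTargetsByStake(validators, 0.9));
```

With these sample stakes, three messages (to A, B, and C) reach 95% of the stake and the smallest validator is skipped, which is the message-saving effect the table describes.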

### Details

The configuration is divided into several key areas:

* **Validator Set Management**: Controls how fresh the validator set must be for network operations. The staleness setting ensures the network operates with reasonably current validator information.

* **Gossip Size Controls**: Manages the size of gossip messages to maintain efficient network usage while ensuring reliable transaction propagation.

* **Push Gossip Configuration**: Defines how transactions are initially propagated through the network, with emphasis on reaching high-stake validators first to optimize network coverage.

* **Pull Gossip Configuration**: Controls how nodes request transactions they may have missed, including throttling mechanisms to prevent network overload.

* **Bloom Filter Settings**: Configures the trade-off between memory usage and false positive rates in transaction filtering, with automatic filter regeneration when accuracy degrades.
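The bloom filter settings trade memory for accuracy. Using the textbook bloom filter formulas (an assumption: AvalancheGo's exact sizing, hash count, and rounding may differ), the sketch below derives a filter size from `expected-bloom-filter-elements` and `expected-bloom-filter-false-positive-probability`, then compares the estimated false positive rate with `max-bloom-filter-false-positive-probability` to decide when a filter would be regenerated.

```ts
// Textbook bloom filter sizing (assumed; not taken from AvalancheGo):
//   bits   m = -n * ln(p) / (ln 2)^2
//   hashes k = (m / n) * ln 2
function bloomSize(expectedElements: number, targetFalsePositive: number) {
  const bits = Math.ceil(
    (-expectedElements * Math.log(targetFalsePositive)) / (Math.LN2 * Math.LN2),
  );
  const hashes = Math.max(1, Math.round((bits / expectedElements) * Math.LN2));
  return { bits, hashes };
}

// Estimated false positive rate after `inserted` elements: (1 - e^(-k*x/m))^k.
function falsePositiveRate(bits: number, hashes: number, inserted: number): number {
  return Math.pow(1 - Math.exp((-hashes * inserted) / bits), hashes);
}

// Defaults from the table above.
const expectedElements = 8 * 1024;
const targetFalsePositive = 0.01;
const maxFalsePositive = 0.05;

const { bits, hashes } = bloomSize(expectedElements, targetFalsePositive);

// A filter would be regenerated once its estimated rate exceeds the maximum.
function needsRegeneration(inserted: number): boolean {
  return falsePositiveRate(bits, hashes, inserted) > maxFalsePositive;
}

console.log(`${bits} bits (~${Math.ceil(bits / 8 / 1024)} KiB), ${hashes} hash functions`);
console.log(needsRegeneration(expectedElements), needsRegeneration(20000));
```

Under these formulas the defaults give roughly 78,500 bits (about 10 KiB) and 7 hash functions; the estimated rate is about 0.01 at 8,192 elements and passes the 0.05 threshold at roughly 11,800 elements.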

# Subnet-EVM

URL: /docs/nodes/chain-configs/subnet-evm

Configuration options available in the Subnet EVM codebase.

These are the configuration options available in the Subnet-EVM codebase. To set these values, you need to create a configuration file at `~/.avalanchego/configs/chains//config.json`.

For the AvalancheGo node configuration options, see the [AvalancheGo Configuration](/docs/nodes/configure/avalanche-l1-configs) page.

## Airdrop

| Option | Type | Description | Default |
| --------- | -------- | ------------------------- | ------- |
| `airdrop` | `string` | Path to the airdrop file. | |

## Subnet EVM APIs

| Option | Type | Description | Default |
| ------------------------ | -------- | ----------------------------------------------------- | ------- |
| `snowman-api-enabled` | `bool` | Enables the Snowman API. | `false` |
| `admin-api-enabled` | `bool` | Enables the Admin API. | `false` |
| `admin-api-dir` | `string` | Directory for the performance profiling in Admin API. | |
| `warp-api-enabled` | `bool` | Enables the Warp API. | `false` |
| `validators-api-enabled` | `bool` | Enables the Validators API. | `true` |

## Enabled Ethereum APIs

| Option | Type | Description | Default |
| ---------- | ---------- | ---------------------------------------------------------------------------------- | --------------------------------------------------------------------------------------------------------------- |
| `eth-apis` | `[]string` | A list of Ethereum APIs to enable. If none is specified, the default list is used. | `"eth"`, `"eth-filter"`, `"net"`, `"web3"`, `"internal-eth"`, `"internal-blockchain"`, `"internal-transaction"` |

## Continuous Profiler

| Option | Type | Description | Default |
| ------------------------------- | ---------- | ------------------------------------------------------------------------ | ------------ |
| `continuous-profiler-dir` | `string` | Directory to store profiler data. If set, creates a continuous profiler. | `""` (empty) |
| `continuous-profiler-frequency` | `Duration` | Frequency to run the continuous profiler if enabled. | `15m` |
| `continuous-profiler-max-files` | `int` | Maximum number of profiler files to maintain. | `5` |

## API Gas/Price Caps

| Option | Type | Description | Default |
| ---------------- | --------- | ------------------------------------------------------ | ---------- |
| `rpc-gas-cap` | `uint64` | Maximum gas allowed in a transaction via the API. | `50000000` |
| `rpc-tx-fee-cap` | `float64` | Maximum transaction fee (in AVAX) allowed via the API. | `100.0` |

## Cache Settings

| Option | Type | Description | Default |
| ----------------------------- | ----- | ----------------------------------------------------------------------- | ------- |
| `trie-clean-cache` | `int` | Size of the trie clean cache in MB. | `512` |
| `trie-dirty-cache` | `int` | Size of the trie dirty cache in MB. | `512` |
| `trie-dirty-commit-target` | `int` | Memory limit target in the dirty cache before performing a commit (MB). | `20` |
| `trie-prefetcher-parallelism` | `int` | Max concurrent disk reads the trie prefetcher should perform at once. | `16` |
| `snapshot-cache` | `int` | Size of the snapshot disk layer clean cache in MB. | `256` |

## Ethereum Settings

| Option | Type | Description | Default |
| ------------------------------- | ------ | --------------------------------------------------- | ------- |
| `preimages-enabled` | `bool` | Enables preimage storage. | `false` |
| `snapshot-wait` | `bool` | Waits for snapshot generation before starting node. | `false` |
| `snapshot-verification-enabled` | `bool` | Enables snapshot verification. | `false` |

## Pruning Settings

| Option | Type | Description | Default |
| ------------------------------------ | --------- | ------------------------------------------------------------------ | ------- |
| `pruning-enabled` | `bool` | If enabled, trie roots are only persisted every `commit-interval` blocks. | `true` |
| `accepted-queue-limit` | `int` | Maximum blocks to queue before blocking during acceptance. | `64` |
| `commit-interval` | `uint64` | Commit interval at which to persist EVM and atomic tries. | `4096` |
| `allow-missing-tries` | `bool` | Suppresses warnings for incomplete trie index if enabled. | `false` |
| `populate-missing-tries` | `*uint64` | Starting point for re-populating missing tries; disables if `nil`. | `nil` |
| `populate-missing-tries-parallelism` | `int` | Concurrent readers when re-populating missing tries on startup. | `1024` |
| `prune-warp-db-enabled` | `bool` | Determines if the warpDB should be cleared on startup. | `false` |

## Metric Settings

| Option | Type | Description | Default |
| --------------------------- | ------ | -------------------------------------------------------- | ------- |
| `metrics-expensive-enabled` | `bool` | Enables debug-level metrics that may impact performance. | `true` |

## Transaction Pool Settings

| Option | Type | Description | Default |
| ----------------------- | ---------- | ----------------------------------------------------------------- | -------------------------------------------- |
| `tx-pool-price-limit` | `uint64` | Minimum gas price (in wei) for the transaction pool. | `1` |
| `tx-pool-price-bump` | `uint64` | Minimum price bump percentage to replace an existing transaction. | `10` |
| `tx-pool-account-slots` | `uint64` | Max executable transaction slots per account. | `16` |
| `tx-pool-global-slots` | `uint64` | Max executable transaction slots for all accounts. | From `legacypool.DefaultConfig.GlobalSlots` |
| `tx-pool-account-queue` | `uint64` | Max non-executable transaction slots per account. | From `legacypool.DefaultConfig.AccountQueue` |
| `tx-pool-global-queue` | `uint64` | Max non-executable transaction slots for all accounts. | `1024` |
| `tx-pool-lifetime` | `Duration` | Maximum time a transaction can remain in the pool. | `10m` |
| `local-txs-enabled` | `bool` | Enables local transactions. | `false` |

## API Resource Limiting Settings

| Option | Type | Description | Default |
| ----------------------------- | --------------- | ----------------------------------------------- | ----------------- |
| `api-max-duration` | `Duration` | Maximum API call duration. | `0` (no limit) |
| `ws-cpu-refill-rate` | `Duration` | CPU time refill rate for WebSocket connections. | `0` (no limit) |
| `ws-cpu-max-stored` | `Duration` | Max CPU time stored for WebSocket connections. | `0` (no limit) |
| `api-max-blocks-per-request` | `int64` | Max blocks per `getLogs` request. | `0` (no limit) |
| `allow-unfinalized-queries` | `bool` | Allows queries on unfinalized blocks. | `false` |
| `allow-unprotected-txs` | `bool` | Allows unprotected (non-EIP-155) transactions. | `false` |
| `allow-unprotected-tx-hashes` | `[]common.Hash` | List of unprotected transaction hashes allowed. | Includes EIP-1820 |

## Keystore Settings

| Option | Type | Description | Default |
| ---------------------------------- | -------- | --------------------------------------- | ------------ |
| `keystore-directory` | `string` | Directory for keystore files. | `""` (empty) |
| `keystore-external-signer` | `string` | External signer for keystore. | `""` (empty) |
| `keystore-insecure-unlock-allowed` | `bool` | Allows insecure unlock of the keystore. | `false` |

## Gossip Settings

| Option | Type | Description | Default |
| ------------------------------ | ------------------ | --------------------------------------------- | ------------ |
| `push-gossip-percent-stake` | `float64` | Percentage of stake to target when gossiping. | `0.9` |
| `push-gossip-num-validators` | `int` | Number of validators to gossip to. | `100` |
| `push-gossip-num-peers` | `int` | Number of peers to gossip to. | `0` |
| `push-regossip-num-validators` | `int` | Number of validators to re-gossip to. | `10` |
| `push-regossip-num-peers` | `int` | Number of peers to re-gossip to. | `0` |
| `push-gossip-frequency` | `Duration` | Frequency of gossiping. | `100ms` |
| `pull-gossip-frequency` | `Duration` | Frequency of pulling gossip. | `1s` |
| `regossip-frequency` | `Duration` | Frequency of re-gossiping. | `30s` |
| `priority-regossip-addresses` | `[]common.Address` | Addresses with priority for re-gossiping. | `[]` (empty) |

## Logging

| Option | Type | Description | Default |
| ----------------- | -------- | ----------------------------------- | -------- |
| `log-level` | `string` | Logging level. | `"info"` |
| `log-json-format` | `bool` | If `true`, logs are in JSON format. | `false` |

## Fee Recipient

| Option | Type | Description | Default |
| -------------- | -------- | ------------------------------------------------------------------ | ------------ |
| `feeRecipient` | `string` | Address to receive transaction fees; must be empty if unsupported. | `""` (empty) |

## Offline Pruning Settings

| Option | Type | Description | Default |
| ----------------------------------- | -------- | -------------------------------------------- | ------------ |
| `offline-pruning-enabled` | `bool` | Enables offline pruning. | `false` |
| `offline-pruning-bloom-filter-size` | `uint64` | Bloom filter size for offline pruning in MB. | `512` |
| `offline-pruning-data-directory` | `string` | Data directory for offline pruning. | `""` (empty) |

## VM2VM Network

| Option | Type | Description | Default |
| ------------------------------ | ------- | --------------------------------------- | ------- |
| `max-outbound-active-requests` | `int64` | Max number of outbound active requests. | `16` |

## Sync Settings

| Option | Type | Description | Default |
| ------------------------------ | -------- | -------------------------------------------------------------- | --------------------------- |
| `state-sync-enabled` | `bool` | Enables state synchronization. | `false` |
| `state-sync-skip-resume` | `bool` | Forces state sync to use highest available summary block. | `false` |
| `state-sync-server-trie-cache` | `int` | Cache size for state sync server trie in MB. | `64` |
| `state-sync-ids` | `string` | Node IDs for state sync. | `""` (empty) |
| `state-sync-commit-interval` | `uint64` | Commit interval for state sync. | `16384` (CommitInterval\*4) |
| `state-sync-min-blocks` | `uint64` | Min blocks ahead of local last accepted to perform state sync. | `300000` |
| `state-sync-request-size` | `uint16` | Key/values per request during state sync. | `1024` |
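A simplified model of how `state-sync-min-blocks` gates state sync: the node only state syncs when the available summary is far enough ahead of its local last accepted block. This is an assumption based on the table description, including treating the boundary as inclusive; the function name is hypothetical and the real decision logic may involve further checks.

```ts
// Hypothetical helper; the default mirrors the Sync Settings table above.
function shouldStateSync(
  stateSyncEnabled: boolean,
  localLastAccepted: number,
  summaryHeight: number,
  stateSyncMinBlocks = 300000,
): boolean {
  if (!stateSyncEnabled) return false;
  // Only worth it when the summary is at least `state-sync-min-blocks` ahead.
  return summaryHeight - localLastAccepted >= stateSyncMinBlocks;
}

console.log(shouldStateSync(true, 0, 5000000)); // true
console.log(shouldStateSync(true, 4900000, 5000000)); // false
```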

## Database Settings

| Option | Type | Description | Default |
| ------------------------- | ----------------- | ----------------------------------------------------------------- | ----------------- |
| `inspect-database` | `bool` | Inspects the database on startup if enabled. | `false` |
| `skip-upgrade-check` | `bool` | Disables checking that upgrades occur before last accepted block. | `false` |
| `accepted-cache-size` | `int` | Depth to keep in the accepted headers and logs cache. | `32` |
| `transaction-history` | `uint64` | Max blocks from head whose transaction indices are reserved. | `0` (no limit) |
| `tx-lookup-limit` | `uint64` | **Deprecated**, use `transaction-history` instead. | |
| `skip-tx-indexing` | `bool` | Skips indexing transactions; useful for non-indexing nodes. | `false` |
| `warp-off-chain-messages` | `[]hexutil.Bytes` | Encoded off-chain messages to sign. | `[]` (empty list) |

## RPC Settings

| Option | Type | Description | Default |
| ----------------- | -------- | --------------------------------- | ------------- |
| `http-body-limit` | `uint64` | Limit for HTTP request body size. | Not specified |

## Standalone Database Configuration

| Option | Type | Description | Default |
| ------------------------- | -------- | ----------------------------------------------------------------------------------------------------- | ------------ |
| `use-standalone-database` | `*PBool` | Use a standalone database. By default Subnet-EVM uses a standalone database if no block was accepted. | `nil` |
| `database-config` | `string` | Content of the database configuration. | `""` (empty) |
| `database-config-file` | `string` | Path to the database configuration file. | `""` (empty) |
| `database-type` | `string` | Type of database to use. | `"pebbledb"` |
| `database-path` | `string` | Path to the database. | `""` (empty) |
| `database-read-only` | `bool` | Opens the database in read-only mode. | `false` |

***

**Note**: Durations can be specified using time units, e.g., `15m` for 15 minutes, `100ms` for 100 milliseconds.
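Duration values such as `15m` or `100ms` have the same shape as Go's `time.ParseDuration` strings. Assuming that syntax, the sketch below converts them to milliseconds, which can help sanity-check values like `continuous-profiler-frequency` or `push-gossip-frequency` before writing a config. It is a simplified parser that accepts only unsigned values, and the function name is hypothetical.

```ts
// Milliseconds per unit suffix accepted by Go's time.ParseDuration.
const MS_PER_UNIT: Record<string, number> = {
  ns: 1e-6,
  us: 1e-3,
  "µs": 1e-3,
  ms: 1,
  s: 1000,
  m: 60 * 1000,
  h: 60 * 60 * 1000,
};

// Converts strings such as "15m", "100ms" or "1h30m" to milliseconds.
function parseDurationMs(input: string): number {
  if (input === "0") return 0; // Go accepts a bare "0" without a unit
  // The whole string must be a sequence of <number><unit> pairs.
  if (!/^(\d+(\.\d+)?(ns|us|µs|ms|s|m|h))+$/.test(input)) {
    throw new Error(`invalid duration: "${input}"`);
  }
  // "ms" is tried before "m" and "s" so that "100ms" is not read as minutes.
  const pair = /(\d+(?:\.\d+)?)(ns|us|µs|ms|s|m|h)/g;
  let total = 0;
  let match: RegExpExecArray | null;
  while ((match = pair.exec(input)) !== null) {
    const unit = match[2] ?? "";
    total += Number(match[1]) * (MS_PER_UNIT[unit] ?? 0);
  }
  return total;
}

console.log(parseDurationMs("15m")); // 900000
console.log(parseDurationMs("30s")); // 30000
```

For example, `parseDurationMs("30s")` returns `30000`, matching the `regossip-frequency` default above.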

# X-Chain

URL: /docs/nodes/chain-configs/x-chain

In order to specify a config for the X-Chain, a JSON config file should be placed at `{chain-config-dir}/X/config.json`.

For example, if `chain-config-dir` has the default value, which is `$HOME/.avalanchego/configs/chains`, then `config.json` can be placed at `$HOME/.avalanchego/configs/chains/X/config.json`.

This allows you to specify a config to be passed into the X-Chain. The default values for this config are:

```json
{
  "index-transactions": false,
  "index-allow-incomplete": false,
  "checksums-enabled": false
}
```

Default values are overridden only if explicitly specified in the config.

The parameters are as follows:

## Transaction Indexing

### `index-transactions`

*Boolean*

Enables AVM transaction indexing if set to `true`.

When set to `true`, AVM transactions are indexed against the `address` and `assetID` involved. This data is available via the `avm.getAddressTxs` [API](/docs/api-reference/x-chain/api#avmgetaddresstxs).

If `index-transactions` is set to `true`, it must remain `true` for the node's lifetime. If it is set to `false` after having been set to `true`, the node will refuse to start unless `index-allow-incomplete` is also set to `true` (see below).

### `index-allow-incomplete`

*Boolean*

Allows incomplete indices. This config value is ignored if there is no X-Chain indexed data in the DB and `index-transactions` is set to `false`.
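The two startup rules above can be summarised as a small decision function. This is a hypothetical model covering only the cases described on this page; `hasIndexedData` stands for "the database already holds X-Chain index data from an earlier run with indexing enabled", and the function name is illustrative.

```ts
// Hypothetical model of the X-Chain index startup rules described above.
function indexStartupCheck(
  hasIndexedData: boolean,
  indexTransactions: boolean,
  indexAllowIncomplete: boolean,
): "ok" | "refuse-to-start" {
  // Turning indexing off after it was on leaves an incomplete index,
  // which is only tolerated when index-allow-incomplete is true.
  if (hasIndexedData && !indexTransactions && !indexAllowIncomplete) {
    return "refuse-to-start";
  }
  // With no indexed data and indexing off, index-allow-incomplete is ignored.
  return "ok";
}

console.log(indexStartupCheck(true, false, false)); // "refuse-to-start"
console.log(indexStartupCheck(true, false, true)); // "ok"
```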

### `checksums-enabled`

*Boolean*

Enables checksums if set to `true`.

# Avalanche L1 Configs

URL: /docs/nodes/configure/avalanche-l1-configs

This page describes the configuration options available for Avalanche L1s.

It is possible to provide parameters for a Subnet. Parameters here apply to all chains in the specified Subnet.

AvalancheGo looks for files specified with `{subnetID}.json` under `--subnet-config-dir` as documented [here](https://build.avax.network/docs/nodes/configure/configs-flags#subnet-configs).

Here is an example of Subnet config file:

```json
{
  "validatorOnly": false,
  "consensusParameters": {
    "k": 25,
    "alpha": 18
  }
}
```

## Parameters

### Private Subnet

#### `validatorOnly` (bool)

If `true`, this node does not expose Subnet blockchain contents to non-validators via P2P messages. Defaults to `false`.

Avalanche Subnets are public by default. This means that every node can sync and listen to ongoing transactions and blocks in a Subnet, even if it is not validating that Subnet.

Subnet validators can choose not to publish the contents of their blockchains via this configuration. If a node sets `validatorOnly` to `true`, the node exchanges messages only with this Subnet's validators. Other peers will not be able to learn the contents of this Subnet from this node.

:::tip

This is a node-specific configuration. Every validator of this Subnet has to use this configuration in order to create a fully private Subnet.

:::

#### `allowedNodes` (string list)

If `validatorOnly` is `true`, the NodeIDs listed here are allowed to sync the Subnet regardless of their validator status. Defaults to an empty list.

:::tip

This is a node-specific configuration. Every validator of this Subnet has to use this configuration in order to properly allow a node in the private Subnet.

:::
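The effect of `validatorOnly` and `allowedNodes` on which peers can learn a Subnet's contents can be sketched as follows. The `SubnetConfig` type and `mayShareWith` function are hypothetical, the NodeIDs are placeholders, and AvalancheGo's actual peer handling is more involved.

```ts
// Hypothetical shape of the two private-Subnet keys in a Subnet config file.
type SubnetConfig = { validatorOnly?: boolean; allowedNodes?: string[] };

// Simplified check: may this node share Subnet contents with `peerNodeID`?
function mayShareWith(config: SubnetConfig, peerNodeID: string, validators: string[]): boolean {
  if (!config.validatorOnly) return true; // public Subnet (the default)
  if (validators.indexOf(peerNodeID) !== -1) return true;
  // allowedNodes only matters when validatorOnly is true.
  return (config.allowedNodes || []).indexOf(peerNodeID) !== -1;
}

const privateSubnet: SubnetConfig = { validatorOnly: true, allowedNodes: ["NodeID-Observer"] };
const subnetValidators = ["NodeID-Validator1", "NodeID-Validator2"];
console.log(JSON.stringify(privateSubnet)); // contents for {subnetID}.json
console.log(mayShareWith(privateSubnet, "NodeID-Observer", subnetValidators)); // true
console.log(mayShareWith(privateSubnet, "NodeID-Stranger", subnetValidators)); // false
```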

### Consensus Parameters

Subnet configs support loading new consensus parameters. Their JSON keys differ from the matching `CLI` keys. These parameters must be grouped under the `consensusParameters` key. The consensus parameters of a Subnet default to the same values used for the Primary Network, which are described in [CLI Snow Parameters](https://build.avax.network/docs/nodes/configure/configs-flags#snow-parameters).

| CLI Key | JSON Key |
| :----------------------------- | :---------------------- |
| `--snow-sample-size` | `k` |
| `--snow-quorum-size` | `alpha` |
| `--snow-commit-threshold` | `beta` |
| `--snow-concurrent-repolls` | `concurrentRepolls` |
| `--snow-optimal-processing` | `optimalProcessing` |
| `--snow-max-processing` | `maxOutstandingItems` |
| `--snow-max-time-processing` | `maxItemProcessingTime` |
| `--snow-avalanche-batch-size` | `batchSize` |
| `--snow-avalanche-num-parents` | `parentSize` |
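Because the JSON keys differ from the CLI flags, a small translation table helps when moving settings from the command line into a Subnet config. The sketch below copies the mapping from the table above and builds the `consensusParameters` block; it only handles numeric values, and the helper names are hypothetical. With the sample and quorum sizes from the example config earlier on this page, it reproduces that `k`/`alpha` config.

```ts
// CLI flag -> JSON key, copied from the table above.
const SNOW_CLI_TO_JSON: Record<string, string> = {
  "--snow-sample-size": "k",
  "--snow-quorum-size": "alpha",
  "--snow-commit-threshold": "beta",
  "--snow-concurrent-repolls": "concurrentRepolls",
  "--snow-optimal-processing": "optimalProcessing",
  "--snow-max-processing": "maxOutstandingItems",
  "--snow-max-time-processing": "maxItemProcessingTime",
  "--snow-avalanche-batch-size": "batchSize",
  "--snow-avalanche-num-parents": "parentSize",
};

// Builds `consensusParameters` from CLI-style values, rejecting unknown flags.
function consensusParameters(flags: Record<string, number>): Record<string, number> {
  const out: Record<string, number> = {};
  for (const flag of Object.keys(flags)) {
    const key = SNOW_CLI_TO_JSON[flag];
    if (key === undefined) throw new Error(`no JSON key for ${flag}`);
    const value = flags[flag];
    if (value === undefined) continue;
    out[key] = value;
  }
  return out;
}

const subnetConfig = {
  validatorOnly: false,
  consensusParameters: consensusParameters({ "--snow-sample-size": 25, "--snow-quorum-size": 18 }),
};
console.log(JSON.stringify(subnetConfig, null, 2));
```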

#### `proposerMinBlockDelay` (duration)

The minimum delay applied when building Snowman++ blocks. Defaults to 1 second.

As one of the ways to control network congestion, Snowman++ will only build a block `proposerMinBlockDelay` after the parent block's timestamp. Some high-performance custom VMs may find this too strict. This flag allows tuning the frequency at which blocks are built.
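In its simplest form, the rule above means a block cannot be built before the parent's timestamp plus `proposerMinBlockDelay`. The sketch below expresses that check in milliseconds with hypothetical function names; real Snowman++ block building may also depend on other factors, such as proposer windows and the VM itself.

```ts
// Earliest Unix time (ms) at which a child block may be built, per the rule above.
function earliestBuildTimeMs(parentTimestampMs: number, proposerMinBlockDelayMs = 1000): number {
  return parentTimestampMs + proposerMinBlockDelayMs;
}

// True if enough time has passed since the parent block to build on top of it.
function canBuildBlock(nowMs: number, parentTimestampMs: number, proposerMinBlockDelayMs = 1000): boolean {
  return nowMs >= earliestBuildTimeMs(parentTimestampMs, proposerMinBlockDelayMs);
}

console.log(canBuildBlock(1500, 1000)); // false with the 1 second default
console.log(canBuildBlock(1500, 1000, 250)); // true with a 250 ms delay
```

With the 1 second default, a chain builds roughly at most one block per second; lowering the delay raises that ceiling.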

### Gossip Configs

It's possible to define different gossip configurations for each Subnet without changing the values for the Primary Network. The JSON keys of these parameters differ from their matching `CLI` keys. These parameters default to the same values used for the Primary Network. For more information, see [CLI Gossip Configs](https://build.avax.network/docs/nodes/configure/configs-flags#gossiping).

| CLI Key | JSON Key |
| :-------------------------------------------------------- | :--------------------------------------- |
| `--consensus-accepted-frontier-gossip-validator-size` | `gossipAcceptedFrontierValidatorSize` |
| `--consensus-accepted-frontier-gossip-non-validator-size` | `gossipAcceptedFrontierNonValidatorSize` |
| `--consensus-accepted-frontier-gossip-peer-size` | `gossipAcceptedFrontierPeerSize` |
| `--consensus-on-accept-gossip-validator-size` | `gossipOnAcceptValidatorSize` |
| `--consensus-on-accept-gossip-non-validator-size` | `gossipOnAcceptNonValidatorSize` |
| `--consensus-on-accept-gossip-peer-size` | `gossipOnAcceptPeerSize` |

# AvalancheGo Config Flags

URL: /docs/nodes/configure/configs-flags

This page lists all available configuration options for AvalancheGo nodes.

{/* markdownlint-disable MD001 */}

You can specify the configuration of a node with the arguments below.

## APIs

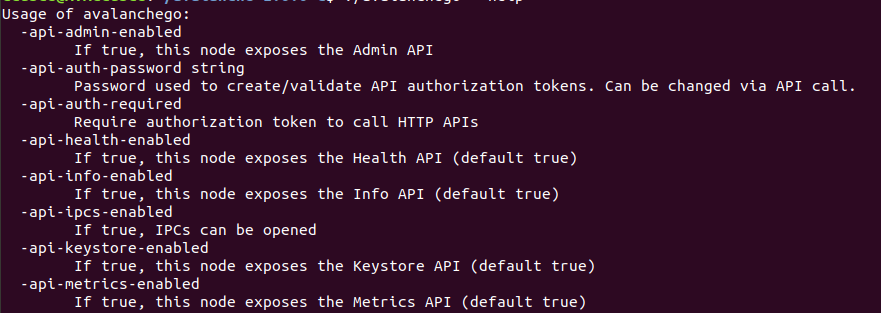

#### `--api-admin-enabled` (boolean)

If set to `true`, this node will expose the Admin API. Defaults to `false`.

See [here](https://build.avax.network/docs/api-reference/admin-api) for more information.

#### `--api-health-enabled` (boolean)

If set to `false`, this node will not expose the Health API. Defaults to `true`. See [here](https://build.avax.network/docs/api-reference/health-api) for more information.

#### `--index-enabled` (boolean)

If set to `true`, this node will enable the indexer and the Index API will be available. Defaults to `false`. See [here](https://build.avax.network/docs/api-reference/index-api) for more information.

#### `--api-info-enabled` (boolean)

If set to `false`, this node will not expose the Info API. Defaults to `true`. See [here](https://build.avax.network/docs/api-reference/info-api) for more information.

#### `--api-metrics-enabled` (boolean)

If set to `false`, this node will not expose the Metrics API. Defaults to `true`. See [here](https://build.avax.network/docs/api-reference/metrics-api) for more information.

## Avalanche Community Proposals

#### `--acp-support` (array of integers)

The `--acp-support` flag allows an AvalancheGo node to indicate support for a set of [Avalanche Community Proposals](https://github.com/avalanche-foundation/ACPs).

#### `--acp-object` (array of integers)

The `--acp-object` flag allows an AvalancheGo node to indicate objection to a set of [Avalanche Community Proposals](https://github.com/avalanche-foundation/ACPs).

## Bootstrapping

#### `--bootstrap-ancestors-max-containers-sent` (uint)

Max number of containers in an `Ancestors` message sent by this node. Defaults to `2000`.

#### `--bootstrap-ancestors-max-containers-received` (uint)

This node reads at most this many containers from an incoming `Ancestors` message. Defaults to `2000`.

#### `--bootstrap-beacon-connection-timeout` (duration)

Timeout when attempting to connect to bootstrapping beacons. Defaults to `1m`.

#### `--bootstrap-ids` (string)

Bootstrap IDs is a comma-separated list of validator IDs. These IDs will be used to authenticate bootstrapping peers. An example setting of this field would be `--bootstrap-ids="NodeID-7Xhw2mDxuDS44j42TCB6U5579esbSt3Lg,NodeID-MFrZFVCXPv5iCn6M9K6XduxGTYp891xXZ"`. The number of IDs given here must match the number of IPs given in `--bootstrap-ips`. The default value depends on the network ID.

#### `--bootstrap-ips` (string)

Bootstrap IPs is a comma-separated list of IP:port pairs. These IP addresses will be used to bootstrap the current Avalanche state. An example setting of this field would be `--bootstrap-ips="127.0.0.1:12345,1.2.3.4:5678"`. The number of IPs given here must match the number of IDs given in `--bootstrap-ids`. The default value depends on the network ID.
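Because the two lists must have the same number of entries, a launch script can check them before starting the node. The sketch below reuses the example values from the two flag descriptions above; `check_bootstrap_lists` is a hypothetical helper, not part of AvalancheGo, and it only compares counts.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeeds only if two comma-separated lists have the
# same number of entries, as --bootstrap-ids and --bootstrap-ips require.
check_bootstrap_lists() {
  local -a ids ips
  IFS=',' read -r -a ids <<< "$1"
  IFS=',' read -r -a ips <<< "$2"
  if [ "${#ids[@]}" -ne "${#ips[@]}" ]; then
    echo "mismatch: ${#ids[@]} bootstrap IDs vs ${#ips[@]} bootstrap IPs" >&2
    return 1
  fi
}

# Example values from the flag descriptions above; replace with real beacons.
bootstrap_ids="NodeID-7Xhw2mDxuDS44j42TCB6U5579esbSt3Lg,NodeID-MFrZFVCXPv5iCn6M9K6XduxGTYp891xXZ"
bootstrap_ips="127.0.0.1:12345,1.2.3.4:5678"

# Print the command line rather than launching the node.
if check_bootstrap_lists "$bootstrap_ids" "$bootstrap_ips"; then
  echo avalanchego --bootstrap-ids="$bootstrap_ids" --bootstrap-ips="$bootstrap_ips"
fi
```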

#### `--bootstrap-max-time-get-ancestors` (duration)

Maximum time to spend fetching a container and its ancestors when responding to a `GetAncestors` message. Defaults to `50ms`.

#### `--bootstrap-retry-enabled` (boolean)

If set to `false`, the node will not retry bootstrapping if it fails. Defaults to `true`.

#### `--bootstrap-retry-warn-frequency` (uint)

Specifies how many times bootstrap should be retried before warning the operator. Defaults to `50`.

## Chain Configs

Some blockchains allow the node operator to provide custom configurations for individual blockchains. These custom configurations are broken down into two categories: network upgrades and optional chain configurations. AvalancheGo reads in these configurations from the chain configuration directory and passes them into the VM on initialization.

#### `--chain-config-dir` (string)

Specifies the directory that contains chain configs, as described [here](https://build.avax.network/docs/nodes/chain-configs). Defaults to `$HOME/.avalanchego/configs/chains`.

If this flag is not provided and the default directory does not exist, AvalancheGo will not exit, since custom configs are optional. However, if the flag is set, the specified folder must exist, or AvalancheGo will exit with an error. This flag is ignored if `--chain-config-content` is specified.

:::note

Please replace `chain-config-dir` and `blockchainID` with their actual values.

:::

Network upgrades are passed in from the location `chain-config-dir`/`blockchainID`/`upgrade.*`. Upgrade files are typically JSON encoded and therefore named `upgrade.json`. However, the format of the file is VM dependent.

After a blockchain has activated a network upgrade, the same upgrade configuration must always be passed in to ensure that the network upgrades activate at the correct time.

The chain configs are passed in from the location `chain-config-dir`/`blockchainID`/`config.*`. Config files are typically JSON encoded and therefore named `config.json`. However, the format of the file is VM dependent.

This configuration is used by the VM to handle optional configuration flags such as enabling/disabling APIs, updating the log level, etc.

The chain configuration is intended to provide optional configuration parameters, and the VM will use default values if nothing is passed in.
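A minimal sketch of the `chain-config-dir`/`blockchainID`/`config.json` layout described above, using a scratch directory in place of the real `--chain-config-dir`. Naming the sub-directory `C` assumes the C-Chain alias is accepted in place of its full blockchainID; the `log-level` key is the same one used in the `--chain-config-content` example in this section.

```bash
#!/usr/bin/env bash
# Scratch directory standing in for --chain-config-dir
# (default: $HOME/.avalanchego/configs/chains).
chain_config_dir="$(mktemp -d)"

# One sub-directory per chain; "C" assumes the C-Chain alias is accepted
# in place of the full blockchainID.
mkdir -p "${chain_config_dir}/C"

# Optional chain config: <chain-config-dir>/<blockchainID>/config.json
printf '%s\n' '{"log-level":"trace"}' > "${chain_config_dir}/C/config.json"

# Print the command line rather than launching the node.
echo avalanchego --chain-config-dir="${chain_config_dir}"
```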

A full reference for all configuration options for some standard chains can be found in a separate [chain config flags](https://build.avax.network/docs/nodes/chain-configs) document.

A full reference for `subnet-evm` upgrade configuration can be found in a separate [Customize a Subnet](https://build.avax.network/docs/avalanche-l1s/upgrade/customize-avalanche-l1) document.

#### `--chain-config-content` (string)

As an alternative to `--chain-config-dir`, chains' custom configurations can be loaded all at once from the command line via the `--chain-config-content` flag. The content must be base64 encoded.

Example:

```bash

cchainconfig="$(echo -n '{"log-level":"trace"}' | base64)"

chainconfig="$(echo -n "{\"C\":{\"Config\":\"${cchainconfig}\",\"Upgrade\":null}}" | base64)"

avalanchego --chain-config-content "${chainconfig}"

```
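The example above works as-is for short configs. For longer ones, note that GNU `base64` wraps its output at 76 columns by default, which would place a raw newline inside the `Config` JSON string (unescaped newlines are not valid in JSON strings). A variant that strips newlines from both encoding layers, assuming a `base64` that reads from stdin as in GNU coreutils and macOS; `b64` is a hypothetical helper name:

```bash
#!/usr/bin/env bash
# Hypothetical helper: single-line base64 (GNU base64 wraps at 76 columns).
b64() { base64 | tr -d '\n'; }

# Inner layer: the C-Chain config itself.
cchainconfig="$(printf '%s' '{"log-level":"trace"}' | b64)"

# Outer layer: chain alias -> {Config, Upgrade}, then encode the whole object.
chainconfig="$(printf '{"C":{"Config":"%s","Upgrade":null}}' "$cchainconfig" | b64)"

# Print the command line rather than launching the node.
echo avalanchego --chain-config-content "${chainconfig}"
```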

#### `--chain-aliases-file` (string)

Path to a JSON file that defines aliases for blockchain IDs. Defaults to `~/.avalanchego/configs/chains/aliases.json`. This flag is ignored if `--chain-aliases-file-content` is specified. Example content:

```json

{

"q2aTwKuyzgs8pynF7UXBZCU7DejbZbZ6EUyHr3JQzYgwNPUPi": ["DFK"]

}

```

The above example aliases the blockchain whose ID is `"q2aTwKuyzgs8pynF7UXBZCU7DejbZbZ6EUyHr3JQzYgwNPUPi"` to `"DFK"`. Chain aliases are added after the primary network aliases and before any changes made to the aliases via the admin API. This means that the first alias included for a blockchain on a Subnet will be treated as the `"Primary Alias"` instead of the full blockchainID. The Primary Alias is used in all metrics and logs.

#### `--chain-aliases-file-content` (string)

As an alternative to `--chain-aliases-file`, this flag allows specifying base64-encoded aliases for blockchains.
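A minimal sketch of encoding the alias mapping from the `--chain-aliases-file` example above for this flag. Newlines are stripped from the `base64` output because GNU `base64` wraps long output; the command is printed rather than run.

```bash
#!/usr/bin/env bash
# Same alias mapping as the --chain-aliases-file example above.
aliases='{"q2aTwKuyzgs8pynF7UXBZCU7DejbZbZ6EUyHr3JQzYgwNPUPi": ["DFK"]}'

# Encode as a single line (GNU base64 wraps long output at 76 columns).
aliases_b64="$(printf '%s' "$aliases" | base64 | tr -d '\n')"

# Print the command line rather than launching the node.
echo avalanchego --chain-aliases-file-content="$aliases_b64"
```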

#### `--chain-data-dir` (string)

Chain specific data directory. Defaults to `$HOME/.avalanchego/chainData`.

## Config File

#### `--config-file` (string)

Path to a JSON file that specifies this node's configuration. Command line arguments will override arguments set in the config file. This flag is ignored if `--config-file-content` is specified.

Example JSON config file:

```json

{

"log-level": "debug"

}

```

:::tip

The [Install Script](https://build.avax.network/docs/tooling/avalanche-go-installer) creates the node config file at `~/.avalanchego/configs/node.json`. No default file is created if [AvalancheGo is built from source](https://build.avax.network/docs/nodes/run-a-node/from-source); in that case, you need to create it manually if needed.

:::

#### `--config-file-content` (string)

As an alternative to `--config-file`, this flag allows specifying base64-encoded config content.

#### `--config-file-content-type` (string)

Specifies the format of the base64-encoded config content. JSON, TOML, and YAML are among the currently supported file formats (see [here](https://github.com/spf13/viper#reading-config-files) for the full list). Defaults to `JSON`.
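A minimal sketch combining `--config-file-content` with `--config-file-content-type`, using a YAML equivalent of the JSON config-file example above. Passing the type as lowercase `yaml` is an assumption based on the format names listed in the linked Viper documentation; the command is printed rather than run.

```bash
#!/usr/bin/env bash
# YAML equivalent of the JSON config-file example above.
node_config='log-level: debug'

# Encode as single-line base64 for use on the command line.
node_config_b64="$(printf '%s\n' "$node_config" | base64 | tr -d '\n')"

# Declare that the decoded content is YAML rather than the default JSON.
echo avalanchego --config-file-content="$node_config_b64" --config-file-content-type=yaml
```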

## Data Directory

#### `--data-dir` (string)

Sets the base data directory where default sub-directories will be placed unless otherwise specified. Defaults to `$HOME/.avalanchego`.

## Database

#### `--db-dir` (string, file path)

Specifies the directory to which the database is persisted. Defaults to `$HOME/.avalanchego/db`.

#### `--db-type` (string)

Specifies the type of database to use. Must be one of `leveldb`, `memdb`, or `pebbledb`.

`memdb` is an in-memory, non-persisted database.

:::note

`memdb` stores everything in memory, so if you have a 900 GiB LevelDB instance, you would need 900 GiB of RAM to use `memdb` instead.

`memdb` is useful for fast one-off testing, not for running an actual node (on Fuji or Mainnet).

Also note that `memdb` does not persist data across restarts, so every time you restart the node it starts syncing from scratch.

:::

### Database Config

#### `--db-config-file` (string)

Path to the database config file. Ignored if `--db-config-file-content` is specified.

#### `--db-config-file-content` (string)

As an alternative to `--db-config-file`, this flag allows specifying base64-encoded database config content.
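A minimal sketch of the two ways to supply a LevelDB config. The keys `blockCacheCapacity` and `openFilesCacheCapacity` come from the LevelDB config listing in this section; the values (a 32 MiB block cache and 2048 cached open files) are purely illustrative, not tuning recommendations. Commands are printed rather than run.

```bash
#!/usr/bin/env bash
# Illustrative values only: 32 MiB block cache, 2048 cached open files.
db_config='{"blockCacheCapacity": 33554432, "openFilesCacheCapacity": 2048}'

# Option 1: write the config to a file and point --db-config-file at it.
db_config_file="$(mktemp)"
printf '%s\n' "$db_config" > "$db_config_file"
echo avalanchego --db-type=leveldb --db-config-file="$db_config_file"

# Option 2: pass the same config inline as single-line base64.
db_config_b64="$(printf '%s' "$db_config" | base64 | tr -d '\n')"
echo avalanchego --db-type=leveldb --db-config-file-content="$db_config_b64"
```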

#### LevelDB Config

A LevelDB config file must be JSON and may contain the following keys. Any keys that are omitted receive their default values.

```go

{

// BlockCacheCapacity defines the capacity of the 'sorted table' block caching.

// Use -1 for zero.

//

// The default value is 12MiB.

"blockCacheCapacity": int,

// BlockSize is the minimum uncompressed size in bytes of each 'sorted table'

// block.

//

// The default value is 4KiB.

"blockSize": int,

// CompactionExpandLimitFactor limits compaction size after expanded.

// This will be multiplied by table size limit at compaction target level.

//

// The default value is 25.

"compactionExpandLimitFactor": int,

// CompactionGPOverlapsFactor limits overlaps in grandparent (Level + 2)

// that a single 'sorted table' generates. This will be multiplied by

// table size limit at grandparent level.

//

// The default value is 10.

"compactionGPOverlapsFactor": int,

// CompactionL0Trigger defines number of 'sorted table' at level-0 that will

// trigger compaction.

//

// The default value is 4.

"compactionL0Trigger": int,

// CompactionSourceLimitFactor limits compaction source size. This doesn't apply to

// level-0.

// This will be multiplied by table size limit at compaction target level.

//

// The default value is 1.

"compactionSourceLimitFactor": int,

// CompactionTableSize limits size of 'sorted table' that compaction generates.

// The limits for each level will be calculated as:

// CompactionTableSize * (CompactionTableSizeMultiplier ^ Level)

// The multiplier for each level can also be fine-tuned using CompactionTableSizeMultiplierPerLevel.

//

// The default value is 2MiB.

"compactionTableSize": int,

// CompactionTableSizeMultiplier defines multiplier for CompactionTableSize.

//

// The default value is 1.

"compactionTableSizeMultiplier": float,

// CompactionTableSizeMultiplierPerLevel defines per-level multiplier for

// CompactionTableSize.

// Use zero to skip a level.

//

// The default value is nil.

"compactionTableSizeMultiplierPerLevel": []float,

// CompactionTotalSize limits total size of 'sorted table' for each level.

// The limits for each level will be calculated as:

// CompactionTotalSize * (CompactionTotalSizeMultiplier ^ Level)

// The multiplier for each level can also be fine-tuned using

// CompactionTotalSizeMultiplierPerLevel.

//

// The default value is 10MiB.

"compactionTotalSize": int,

// CompactionTotalSizeMultiplier defines multiplier for CompactionTotalSize.

//

// The default value is 10.

"compactionTotalSizeMultiplier": float,

// DisableSeeksCompaction allows disabling 'seeks triggered compaction'.

// The purpose of 'seeks triggered compaction' is to optimize database so

// that 'level seeks' can be minimized; however, this might generate many

// small compactions, which may not be preferable.

//

// The default is true.

"disableSeeksCompaction": bool,

// OpenFilesCacheCapacity defines the capacity of the open files caching.

// Use -1 for zero; this has the same effect as specifying NoCacher to OpenFilesCacher.

//

// The default value is 1024.

"openFilesCacheCapacity": int,

// WriteBuffer defines maximum size of a 'memdb' before flushed to

// 'sorted table'. 'memdb' is an in-memory DB backed by an on-disk

// unsorted journal.

//

// LevelDB may hold up to two 'memdb' at the same time.

//